MATH2331 Linear Algebra

The Class

4 quizzes (open more than 24 hours) open on Thursday

Exams are timed (65-70 minute test)

Final Exam (not cumulative) on August 19th

1.1 Introduction to Linear Systems

Background

$\mathbb{R}$ = All real numbers $(-\infty, \infty)$

$\mathbb{R}^{2}$ = xy-plane

$\mathbb{R}^{n}$ = Vector space. All $(x_1, x_2, …, x_n)$

Single variable Functions:

Linear: $f(x) = 5x,\ f(x) = ax$

Non-linear: $f(x) = x^{2} + \cos (x),\ f(x) = e^{x},\ f(x) = \tan ^{-1}(x)$

Multi-variable Functions:

Linear: $f(x,\ y) = ax + by,\ f(x,\ y,\ z) = 5x + 3y + bz$

Non-linear:

Equations:

$5 = 4x$

A linear equation in the variables $x_1,\ x_2,\ x_3,\ …,\ x_n$ is an equation of the form $a_1x_1 + a_2x_2 + a_3x_3 + \cdots + a_nx_n = b$ where $a_1,\ a_2,\ …,\ a_n$ and $b$ are real numbers.

A linear system (or system of linear equations) is a collection of linear equations in the same variables $x_1,\ x_2,\ x_3,\ …,\ x_n$.

Example

$\begin{vmatrix} x & +3y & = 1 \\ 2x & -y & =9 \end{vmatrix} \overset{L_2 = -2 L_1 + L_2}{\implies} \begin{vmatrix} x & +3y & =1 \\ 0 & -7y & =7 \end{vmatrix} \overset{L_2 = -\frac{1}{7} L_2}{\implies} \begin{vmatrix} x & +3y & =1 \\ 0 & y & =-1 \end{vmatrix}$

$\overset{L_1 = -3 L_2 + L_1}{\implies} \begin{vmatrix} x & = 4 \\ y & = -1 \end{vmatrix}$

Example

$\begin{vmatrix} x & + 3y & =2 \\ -2x & -6y & =-4 \end{vmatrix} \overset{L_2 = 2L_1 + L_2}{\implies} \begin{vmatrix} x & +3y & = 2 \\ & 0 & = 0 \end{vmatrix}$

Solutions form the line $x+3y=2$. Infinitely many solutions.

Example:

$\begin{vmatrix} x & +y & & = 0 \\ 2x & -y & + 3z & = 3 \\ x & -2y & -z & =3 \end{vmatrix} \overset{\overset{L_2 = -2L_1 + L_2}{L_3 = -L_1 + L_3}}{\implies} \begin{vmatrix} x & +y & &=0 \\ & -3y & +3z & = 3 \\ & -3y & -z & =3 \end{vmatrix}$

$\overset{L_2 = -\frac{1}{3} L_2}{\implies} \begin{vmatrix} x & +y & & = 0 \\ & y & -z & =-1 \\ & -3y & -z & =3 \end{vmatrix} \overset{L_3 = 3L_2 + L_3}{\implies} \begin{vmatrix} x & +y & & =0 \\ & y & -z & =-1 \\ & & -4z & = 0 \end{vmatrix}$

$\overset{L_3 = -\frac{1}{4} L_3}{\implies} \begin{vmatrix} x & +y & & =0 \\ & y & -z & = -1 \\ & & z & =0 \end{vmatrix} \overset{L_2 = L_3 + L_2}{\implies} \begin{vmatrix} x & + y & & =0 \\ & y & & =-1 \\ & & z & =0 \end{vmatrix}$

$\overset{L_1 = L_1 - L_2}{\implies} \begin{vmatrix} x & =1 \\ y & =-1 \\ z &=0 \end{vmatrix}$

Solution $(x,\ y,\ z) = (1,\ -1,\ 0)$

Example

$\begin{vmatrix} x & + y & + z & =2 \\ & y & +z & =1 \\ x & +2y & +2z & =3 \end{vmatrix} \overset{L_3 = -L_1 + L_3}{\implies} \begin{vmatrix} x & +y & +z & = 2 \\ & y & + z & =1 \\ & y & +z & =1 \end{vmatrix}$

$\overset{L_3 = -L_2 + L_3}{\implies} \begin{vmatrix} x & +y & +z & =2 \\ & y & +z & =1 \\ & & 0 & =0 \end{vmatrix} \overset{L_1 = -L_2 + L_1}{\implies} \begin{vmatrix} x & & & =1\\ & y & +z & =1\\ & & 0 & =0 \end{vmatrix}$

This example has a free variable. Let $z=t$.

$y + z = 1 \implies y = 1 -t$

Solution: $(x,\ y,\ z) = (1,\ 1-t,\ t)$. The system has infinitely many solutions, one for each real number $t$.

Example

$\begin{vmatrix} x & + y & + z & =2 \\ & y & + z & =1 \\ & 2y & + 2z & =0 \end{vmatrix} \overset{L_3 = -2L_2 + L_3}{\implies} \begin{vmatrix} x & +y & +z & =2 \\ & y & + z & =1 \\ & & 0 & =-2 \end{vmatrix}$

No solutions.

How many solutions are possible to a system of linear equations?

Answer:

- 0 Solutions

- 1 Solution

- Infinitely many solutions

(This is because planes cannot curve)

Geometric Interpretation

A linear equation $ax + by = c$ (with $a$, $b$ not both zero) defines a line in $\mathbb{R}^{2}$

Solutions to a linear system are intersections of lines in $\mathbb{R}^{2}$.

- 0 Points (Solutions)

- 1 Point (Solution)

- $\infty$ many points (Solutions) if they are the same line

A linear equation $ax + by + cz = d$ (with $a$, $b$, $c$ not all zero) defines a plane in $\mathbb{R}^{3}$.

Solutions to a linear system are intersections of (hyper) planes in $\mathbb{R}^{3}$.

- 0 Points (Solutions)

- 1 Point (Solution)

- $\infty$ many points (Solutions): all the planes contain a common line, or all the planes are the same plane.

Example

Find all polynomials $f(t)$ of degree $\le 2$.

- Whose graph runs through (1, 3) and (2, 6) and

- Such that $f^{\prime}(1) = 1$

- Use $f(t) = a + bt + ct^{2}$

We know

- $f(1) = 3 \implies a + b + c = 3$

- $f(2) = 6 \implies a + 2b + 4c = 6$

- $f^{\prime}(t) = b + 2ct$

- $f^{\prime}(1) = 1 \implies b + 2c = 1$

$\begin{vmatrix} a & +b & + c & =3 \\ a & +2b & +4c & =6 \\ & b & +2c & =1 \end{vmatrix} \overset{L_2 = -L_1 + L_2}{\implies} \begin{vmatrix} a & +b & +c & =3\\ & b & +3c & =3 \\ & b & +2c & =1 \end{vmatrix}$

$\overset{L_3 = L_2 - L_3}{\implies} \begin{vmatrix} a & +b & +c & =3 \\ & b& +3c & =3 \\ & & c & =2 \end{vmatrix} \overset{\overset{L_2 = -3L_3 + L_2}{L_1 = -L_3 + L_1}}{\implies} \begin{vmatrix} a & +b & & =1\\ & b & & =-3\\ & & c & =2 \end{vmatrix}$

$\overset{L_1 = L_1 - L_2}{\implies} \begin{vmatrix} a & =4 \\ b & =-3 \\ c & =2 \end{vmatrix}$

$f(t) = 4 - 3t + 2t^{2}$
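A quick way to sanity-check a result like this is to plug the polynomial back into the three conditions. The sketch below does that in Python; the names `f`, `f_prime`, and `checks` are illustrative and not part of the lecture.

```python
# Coefficients found by elimination: a = 4, b = -3, c = 2.
def f(t):
    return 4 - 3 * t + 2 * t ** 2

# Derivative of a + b*t + c*t^2 is b + 2*c*t.
def f_prime(t):
    return -3 + 4 * t

# The three conditions from the problem: f(1) = 3, f(2) = 6, f'(1) = 1.
checks = (f(1), f(2), f_prime(1))
print(checks)
```

If any of the three values disagreed with the problem statement, an arithmetic slip in the row operations would be the first thing to look for.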

1.2 Matrices, Vectors, and Gauss-Jordan Elimination

$\begin{vmatrix} x & +2y & +3z & =1 \\ 2x & +4y & +7z & =2 \\ 3x & +7y & +11z & =8 \end{vmatrix}$

We can store all information in this linear system in a matrix which is a rectangular array of numbers.

Augmented Matrix:

$\begin{bmatrix} 1 & 2 & 3 & \bigm| & 1 \\ 2 & 4 & 7 & \bigm| & 2 \\ 3 & 7 & 11 & \bigm| & 8 \end{bmatrix} $

3 rows and 4 columns = $3 \times 4$ matrix

Coefficient Matrix:

$\begin{bmatrix} 1 & 2 & 3 \\ 2 & 4 & 7 \\ 3 & 7 & 11 \end{bmatrix}$

3 x 3 matrix

Generally, we have

$A = [a_{ij}] = \begin{bmatrix} a_{11} & a_{12} & a_{13} & \cdots & a_{1m} \\ a_{21} & a_{22} & a_{23} & \cdots & a_{2m} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nm} \end{bmatrix} $

Here, $A$ is $n\times m$ (n rows and m columns).

For square $n \times n$ matrices:

Diagonal: $a_{ij} = 0$ for $i \neq j$

Lower triangular: $a_{ij} = 0$ for $i < j$

Upper triangular: $a_{ij} = 0$ for $i > j$

Identity matrix $I_n$: square $n\times n$ diagonal ($a_{ij} = 0$ for $i \neq j$ ) and $a_{ii} = 1$ for $1 \le i \le n$

$I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} $

0 Matrix: Any size; all entries are 0

$\begin{bmatrix} 0 & 0 & 0 & 0 & 0 \\0 & 0 & 0 & 0 & 0 \end{bmatrix}$

Above is a $2\times 5$ 0-Matrix

Columns of an $n \times m$ matrix form vectors in $\mathbb{R}^{n}$. Example:

\[\begin{bmatrix} 1 & 2 & 3 & \Bigm| & 1 \\ 2 & 4 & 7 & \Bigm| & 2 \\ 3 & 7 & 11 & \Bigm| & 8 \end{bmatrix}\]We can represent vectors as the columns:

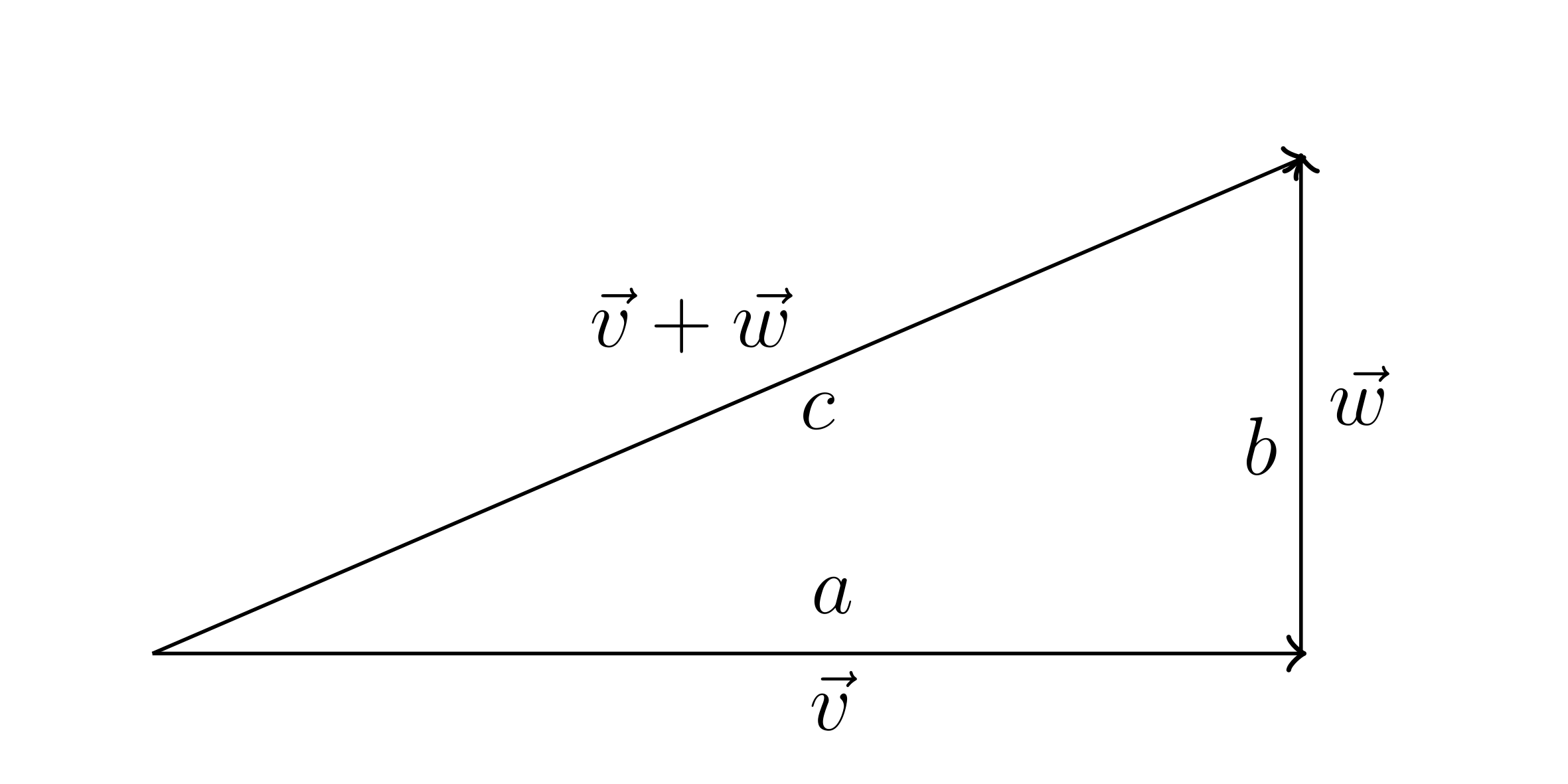

\[\begin{bmatrix} 1 \\ 2 \end{bmatrix} , \begin{bmatrix} 3 \\ 1 \end{bmatrix} , \begin{bmatrix} 1 \\ 9 \end{bmatrix} , \text{ in } \mathbb{R}^2\]This is the standard representation for a vector in $\mathbb{R}^{n}$. A vector as an arrow starting at origin and ending at corresponding point.

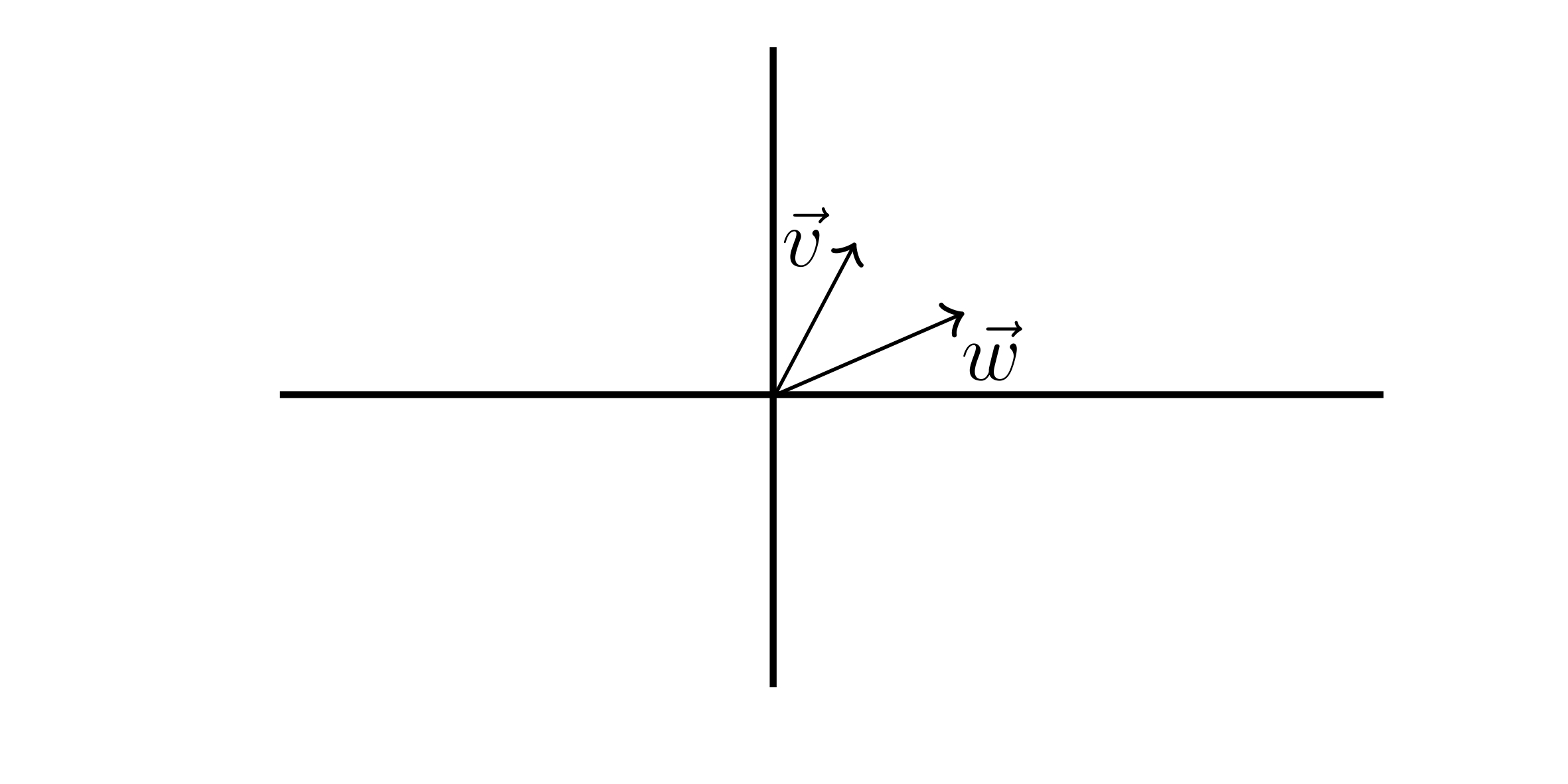

Consider the two vectors:

\[\vec{v} = \begin{bmatrix} 1 \\ 2 \end{bmatrix} , \vec{w} = \begin{bmatrix} 3 \\ 1 \end{bmatrix} \text{ in } \mathbb{R}^2\]

We may use 3 elementary row operations

- Multiply/divide a row by a nonzero constant

- Add/subtract a multiple of one row to another

- Interchange two rows
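The three operations above can be sketched as small Python functions acting on a matrix stored as a list of rows. This is only an illustration: the function names (`scale_row`, `add_multiple`, `swap_rows`) are made up for this sketch, rows are indexed from 0 rather than 1, and `Fraction` is used so no rounding occurs. The sequence of calls reduces the $3 \times 4$ augmented matrix shown earlier in this section.

```python
from fractions import Fraction

def scale_row(M, i, c):
    """Multiply row i by a nonzero constant c."""
    if c == 0:
        raise ValueError("scaling constant must be nonzero")
    M[i] = [c * x for x in M[i]]

def add_multiple(M, src, dst, c):
    """Add c times row src to row dst."""
    M[dst] = [y + c * x for x, y in zip(M[src], M[dst])]

def swap_rows(M, i, j):
    """Interchange rows i and j."""
    M[i], M[j] = M[j], M[i]

# Augmented matrix [1 2 3 | 1; 2 4 7 | 2; 3 7 11 | 8]
M = [[Fraction(v) for v in row]
     for row in [[1, 2, 3, 1], [2, 4, 7, 2], [3, 7, 11, 8]]]

add_multiple(M, 0, 1, -2)   # -2 R1 + R2
add_multiple(M, 0, 2, -3)   # -3 R1 + R3
swap_rows(M, 1, 2)          # R2 <-> R3
add_multiple(M, 2, 0, -3)   # -3 R3 + R1
add_multiple(M, 2, 1, -2)   # -2 R3 + R2
add_multiple(M, 1, 0, -2)   # -2 R2 + R1

# The last column now holds the solution (x, y, z).
solution = [row[-1] for row in M]
print(solution)
```

`scale_row` is not needed for this particular matrix because every pivot is already 1, but it is included so that all three operations are represented.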

Solving the system of linear equations:

Example

\[\begin{bmatrix} 1 & 2 & 3 & \bigm| & 1 \\ 2 & 4 & 7 & \bigm| & 2 \\ 3 & 7 & 11 & \bigm| & 8 \end{bmatrix} \overset{\overset{-2R_1 + R_2}{-3R_1 + R_3}}{\implies} \begin{bmatrix} 1 & 2 & 3 & \bigm| & 1 \\ 0 & 0 & 1 & \bigm| & 0 \\ 0 & 1 & 2 & \bigm| & 5 \end{bmatrix} \overset{R_2 \leftrightarrow R_3}{\implies} \begin{bmatrix} 1 & 2 & 3 & \bigm| & 1 \\ 0 & 1 & 2 & \bigm| & 5 \\ 0 & 0 & 1 & \bigm| & 0 \end{bmatrix}\]

\[\overset{\overset{-3R_3 + R_1}{-2R_3 + R_2}}{\implies} \begin{bmatrix} 1 & 2 & 0 & \bigm| & 1 \\ 0 & 1 & 0 & \bigm| & 5 \\ 0 & 0 & 1 & \bigm| & 0 \end{bmatrix} \overset{-2R_2 + R_1}{\implies} \begin{bmatrix} 1 & 0 & 0 & \bigm| & -9 \\ 0 & 1 & 0 & \bigm| & 5 \\ 0 & 0 & 1 & \bigm| & 0 \end{bmatrix} \text{ identity matrix}\]

\[\therefore \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} -9 \\ 5 \\ 0 \end{bmatrix}\]

Example

\[\begin{bmatrix} 1 & -1 & 1 & \bigm| & 0 \\ 1 & 0 & -2 & \bigm| & 2 \\ 2 & -1 & 1 & \bigm| & 4 \\ 0 & 2 & -5 & \bigm| & 4 \end{bmatrix} \overset{\overset{-R_1 + R_2}{-2R_1 + R_3}}{\implies} \begin{bmatrix} 1 & -1 & 1 & \bigm| & 0 \\ 0 & 1 & -3 & \bigm| & 2 \\ 0 & 1 & -1 & \bigm| & 4 \\ 0 & 2 & -5 & \bigm| & 4 \end{bmatrix}\]

\[\overset{\overset{-R_2 + R_3}{-2R_2 + R_4}}{\implies} \begin{bmatrix} 1 & -1 & 1 & \bigm| & 0 \\ 0 & 1 & -3 & \bigm| & 2 \\ 0 & 0 & 2 & \bigm| & 2 \\ 0 & 0 & 1 & \bigm| & 0 \end{bmatrix} \overset{R_3 \leftrightarrow R_4}{\implies} \begin{bmatrix} 1 & -1 & 1 & \bigm| & 0 \\ 0 & 1 & -3 & \bigm| & 2 \\ 0 & 0 & 1 & \bigm| & 0 \\ 0 & 0 & 2 & \bigm| & 2 \end{bmatrix}\]

\[\overset{-2R_3 + R_4}{\implies} \begin{bmatrix} 1 & -1 & 1 & \bigm| & 0 \\ 0 & 1 & -3 & \bigm| & 2 \\ 0 & 0 & 1 & \bigm| & 0 \\ 0 & 0 & 0 & \bigm| & 2 \end{bmatrix}\]

No solutions

Example

\[\begin{bmatrix} x_1 & x_2 & x_3 & x_4 & x_5 \cdots \\ \vdots & \vdots & \vdots & \vdots & \ddots \end{bmatrix} = \begin{bmatrix} 1 & -7 & 0 & 0 & 1 & \bigm| & 3 \\ 0 & 0 & 1 & 0 & -2 & \bigm| & 2 \\ 0 & 0 & 0 & 1 & 1 & \bigm| & 1 \end{bmatrix}\]

This is already as far as we can go with row operations, but we have two free variables. They are $x_2$ and $x_5$.

We can say that

$x_2 = t$

$x_5 = s$

$x_1 = 3 + 7t - s$

$x_3 = 2 + 2s$

$x_4 = 1 - s$

\[\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5 \end{bmatrix} = \begin{bmatrix} 3 + 7t - s \\ t \\ 2 + 2s \\ 1 - s \\ s \end{bmatrix}\]

Example

\[\begin{bmatrix} 1 & 1 & 2 & \bigm| & 0 \\ 2 & -1 & 1 & \bigm| & 6 \\ 4 & 1 & 5 & \bigm| & 6 \end{bmatrix} \overset{\overset{-2R_1 + R_2}{-4R_1 + R_3}}{\implies} \begin{bmatrix} 1 & 1 & 2 & \bigm| & 0 \\ 0 & -3 & -3 & \bigm| & 6 \\ 0 & -3 & -3 & \bigm| & 6 \end{bmatrix}\]

\[\overset{\left( -\frac{1}{3} \right) R_2}{\implies} \begin{bmatrix} 1 & 1 & 2 & \bigm| & 0 \\ 0 & 1 & 1 & \bigm| & -2 \\ 0 & -3 & -3 & \bigm| & 6 \end{bmatrix} \overset{3R_2 + R_3}{\implies} \begin{bmatrix} 1 & 1 & 2 & \bigm| & 0 \\ 0 & 1 & 1 & \bigm| & -2 \\ 0 & 0 & 0 & \bigm| & 0 \end{bmatrix}\]

\[\overset{-R_2 + R_1}{\implies} \begin{bmatrix} 1 & 0 & 1 & \bigm| & 2 \\ 0 & 1 & 1 & \bigm| & -2 \\ 0 & 0 & 0 & \bigm| & 0 \end{bmatrix}\]

$z=t$ (free variable)

$x = 2-t$

$y= -2 - t$

\[\begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} 2 -t\\ -2-t\\ t \end{bmatrix}\]

Reduced Row Echelon Form (rref)

Definition: An $n\times m$ matrix is in reduced row echelon form (rref) provided:

- If a row has nonzero entries, the first nonzero entry is a 1, called leading 1 or pivot.

- If a column contains a leading 1, then all other entries in that column are zero.

- If a row contains a leading 1, then each row above it has a leading 1 that is further to the left.

Examples of matrices in reduced row echelon form:

\[\begin{bmatrix} 1 & -7 & 0 & 0\\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \end{bmatrix} , \begin{bmatrix} 1 & 0 & 5 & 2\\ 0 & 1 & 2 & 7 \\ 0 & 0 & 0 & 0 \\ \end{bmatrix} , \begin{bmatrix} 1 & 2 & 5 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} , \begin{bmatrix} 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 \\ \end{bmatrix}\]

Row echelon form (ref)

Differences from rref:

- The leading entry (pivot) in a row can be any nonzero number

- No restriction on entries above a leading entry in a column

Using the 3 elementary row operations, we may transform any matrix to one in rref (also ref). This method of solving a linear system is called Gauss-Jordan Elimination.
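As an illustration of how the procedure can be automated, here is a minimal Python sketch of Gauss-Jordan elimination. It is one reasonable way to organize the steps, not necessarily the order of operations used in lecture; the function name `rref` and the use of `Fraction` for exact arithmetic are choices made for this sketch.

```python
from fractions import Fraction

def rref(rows):
    """Return the reduced row echelon form of a matrix given as a list of rows."""
    M = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(M), len(M[0])
    pivot_row = 0
    for col in range(n_cols):
        if pivot_row == n_rows:
            break
        # Find a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, n_rows) if M[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot here: this column belongs to a free variable
        # Interchange rows so the pivot sits in pivot_row.
        M[pivot_row], M[pivot] = M[pivot], M[pivot_row]
        # Scale the pivot row so the leading entry becomes 1.
        lead = M[pivot_row][col]
        M[pivot_row] = [x / lead for x in M[pivot_row]]
        # Clear every other entry in the pivot column.
        for r in range(n_rows):
            if r != pivot_row and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[pivot_row])]
        pivot_row += 1
    return M

# Augmented matrix from the free-variable example in this section.
result = rref([[1, 1, 2, 0], [2, -1, 1, 6], [4, 1, 5, 6]])
for row in result:
    print([str(x) for x in row])
```

Each pass of the loop uses exactly the three elementary row operations: an interchange, a scaling, and adding multiples of the pivot row to the other rows.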

1.3 On the Solutions of Linear Systems: Matrix Algebra

Consider the augmented matrices:

ref with 1 unique solution: $\begin{bmatrix} 2 & 0 & 0 & \bigm| & -3 \\ 0 & 3 & 0 & \bigm| & 3 \\ 0 & 0 & 1 & \bigm| & 14\end{bmatrix} $

rref with infinitely many solutions: $\begin{bmatrix} 1 & 0 & 0 & 0 & 1 & \bigm| & -1 \\ 0 & 1 & 0 & 0 & 1 & \bigm| & 0 \\ 0 & 0 & 1 & 1 & 0 & \bigm| & 2\end{bmatrix} $

ref with 1 unique solution: $\begin{bmatrix} 1 & 0 & 0 & \bigm| & 4 \\ 0 & 1 & 2 & \bigm| & 4 \\ 0 & 0 & 3 & \bigm| & 6 \\ 0 & 0 & 0 & \bigm| & 0 \\ 0 & 0 & 0 & \bigm| & 0 \\ \end{bmatrix}$

ref with no solutions: $\begin{bmatrix} 1 & 0 & 0 & \bigm| & 3 \\ 0 & 1 & 0 & \bigm| & -1 \\ 0 & 0 & 2 & \bigm| & 4 \\ 0 & 0 & 0 & \bigm| & 10 \\ \end{bmatrix}$

A linear system is

- consistent provided it has at least one solution

- inconsistent provided it has no solutions

Theorem:

- A linear system is inconsistent if and only if a row echelon form (ref) of its augmented matrix has a row $\begin{bmatrix} 0 & 0 & 0 & \cdots & 0 & \bigm| & c \end{bmatrix}$ where $c\neq 0$.

- If a linear system is consistent, then it has either:

  - A unique solution (no free variables), or

  - Infinitely many solutions (at least one free variable)

Rank

The rank of a matrix $A$, denoted rank(A) is the number of leading 1’s in rref(A) (the reduced row echelon form of $A$).

Example

ref

\[\begin{bmatrix} 2 & 0 & 0\\ 0 & 3 & 0 \\ 0 & 0 & 1 \end{bmatrix}\]

Has a rank of 3 (3x3)

Example

rref:

\[\begin{bmatrix} 1 & 0 & 0 & 0 & 1 \\ 0 & 1 & 0 & 0 & 1 \\ 0 & 0 & 1 & 1 & 0 \\ \end{bmatrix}\]

Has a rank of 3 (3x5)

Example

ref: \(\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 2 \\ 0 & 0 & 3 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \\ \end{bmatrix}\)

Rank of 3 (5x3)

Example

rref: \(\begin{bmatrix} 1 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \\ \end{bmatrix}\)

Rank of 1 (3x3)

Example

rref: \(\begin{bmatrix} 0 & 0 & 1 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 & 4 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \\ 0 & 0 & 0 & 0 & 0 & 0 \\ \end{bmatrix}\)

rank of 3 (4x6 matrix)

Example

\[\begin{bmatrix} 3 & 3 & 3 \\ 3 & 3 & 3 \end{bmatrix} \overset{\frac{1}{3} R_1}{\implies} \begin{bmatrix} 1 & 1 & 1 \\ 3 & 3 & 3 \end{bmatrix} \overset{-3R_1 + R_2}{\implies} \text{rref}: \begin{bmatrix} 1 & 1 & 1 \\ 0 & 0 & 0 \end{bmatrix}\]

This matrix has a rank of 1.

Example

\[\begin{bmatrix} 1 & 1 & 1 \\ 1 & 2 & 3 \\ 1 & 3 & 6 \\ 0 & 0 & 0 \end{bmatrix} \overset{\overset{R_2 - R_1}{-R_1 + R_3}}{\implies} \begin{bmatrix} 1 & 1 & 1 \\ 0 & 1 & 2 \\ 0 & 2 & 5 \\ 0 & 0 & 0 \end{bmatrix} \overset{R_3 - 2R_2}{\implies} \begin{bmatrix} 1 & 1 & 1 \\ 0 & 1 & 2 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \end{bmatrix}\]

The rank of this matrix is 3.

Example

\[C = \begin{bmatrix} 0 & 1 & a \\ -1 & 0 & b \\ -a & -b & 0 \end{bmatrix} \overset{R_1 \leftrightarrow R_2}{\implies} \begin{bmatrix} -1 & 0 & b \\ 0 & 1 & a \\ -a & -b & 0 \end{bmatrix} \overset{-1 \times R_1}{\implies} \begin{bmatrix} 1 & 0 & -b \\ 0 & 1 & a \\ -a & -b & 0 \end{bmatrix}\]

\[\overset{aR_1 + R_3}{\implies} \begin{bmatrix} 1 & 0 & -b \\ 0 & 1 & a \\ 0 & -b & -ab \end{bmatrix} \overset{bR_2 + R_3}{\implies} \begin{bmatrix} 1 & 0 & -b \\ 0 & 1 & a \\ 0 & 0 & 0 \end{bmatrix}\]

Rank is 2.

Suppose we have an $n \times m$ coefficient matrix

\[A = \begin{bmatrix} a_{11} & a_{12} & \cdots & a_{1m} \\ a_{21} & a_{22} & \cdots & a_{2m} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nm} \end{bmatrix}\]

$\text{rank}(A) \le n$

$\text{rank}(A) \le m$

Number of free variables = $m - \text{rank}(A)$

If a linear system with coefficient matrix $A$ has:

- exactly one solution, then we have no free variables. Therefore $\text{rank}(A) = m$.

- no solutions, then a ref of the augmented matrix has a row $\begin{bmatrix} 0 & 0 & \cdots & 0 & \big| & b \end{bmatrix} $ where $b\neq 0$. Therefore $\text{rank}(A) < n$.

- infinitely many solutions, then there is at least one free variable, so $\text{rank}(A) < m$.

Square Matrices: When a linear system has an $n \times n$ coefficient matrix $A$, there is exactly one solution…

if and only if $\text{rank}(A) = n$

if and only if $\text{rref}(A) = I_n$ (the $n \times n$ identity)
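The rank facts above can be turned into a small classifier. The sketch below is illustrative only: `rank`, `free_variables`, and `classify` are names invented here, the rank is computed by counting pivots after forward elimination (the same count as the leading 1's in rref), and the "no solutions" test compares the rank of the coefficient matrix with the rank of the augmented matrix.

```python
from fractions import Fraction

def rank(rows):
    """Number of pivots found by forward elimination."""
    M = [[Fraction(x) for x in row] for row in rows]
    count = 0
    for col in range(len(M[0])):
        pivot = next((r for r in range(count, len(M)) if M[r][col] != 0), None)
        if pivot is None:
            continue
        M[count], M[pivot] = M[pivot], M[count]
        for r in range(count + 1, len(M)):
            factor = M[r][col] / M[count][col]
            M[r] = [a - factor * b for a, b in zip(M[r], M[count])]
        count += 1
    return count

def free_variables(A):
    """Number of free variables = m - rank(A), where m is the number of columns."""
    return len(A[0]) - rank(A)

def classify(A, b):
    """Classify the system with coefficient matrix A and right-hand side b."""
    augmented = [list(row) + [bi] for row, bi in zip(A, b)]
    if rank(A) < rank(augmented):
        return "no solutions"
    if free_variables(A) == 0:
        return "exactly one solution"
    return "infinitely many solutions"

print(classify([[1, 2, 3], [2, 4, 7], [3, 7, 11]], [1, 2, 8]))
print(classify([[1, 1, 2], [2, -1, 1], [4, 1, 5]], [0, 6, 6]))
print(classify([[1, 1, 1], [0, 1, 1], [0, 2, 2]], [2, 1, 0]))
```

The three calls use systems that appear earlier in these notes, so the printed labels can be compared with the hand computations.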

Matrix Algebra

Suppose $A = [a_{ij}]$ and $B = [b_{ij}]$ are both $n \times m$ and $c$ is in $\mathbb{R}$.

Matrix Sum: $A+B = [a_{ij} + b_{ij}]$ (add entry by entry)

Scalar Multiplication: $cA = [ca_{ij}]$ (multiply every entry by $c$)

Example

\[\begin{bmatrix} 2 & 3 \\ 5 & -2 \\ -1 & 0 \end{bmatrix} + \begin{bmatrix} -1 & 6 \\ 3 & 0 \\ 0 & 2 \end{bmatrix} = \begin{bmatrix} 1 & 9 \\ 8 & -2 \\ -1 & 2 \end{bmatrix}\]

Example

\[5 \begin{bmatrix} 2 & 3 & -1 \\ 1 & 3 & -3 \end{bmatrix} = \begin{bmatrix} 10 & 15 & -5 \\ 5 & 15 & -15 \end{bmatrix}\]

Example

Vector Sum and Scalar multiplication

\[\vec{v} = \begin{bmatrix} 4\\ 3 \\ 1 \end{bmatrix}\]

\[\vec{w} = \begin{bmatrix} 0 \\ 1 \\ -1 \end{bmatrix}\]

\[\vec{v} + \vec{w} = \begin{bmatrix} 4 \\ 4 \\ 0 \end{bmatrix}\]

What about matrix/vector products?

- Dot product for 2 vectors in $\mathbb{R}^n$

- $A \vec{x}$ matrix times vector

Definition:

For vectors $\vec{v} = \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} $ and $\vec{w} = \begin{bmatrix} w_1 \\ w_2 \\ \vdots \\ w_n \end{bmatrix} $ in $\mathbb{R}^n$, the dot product $\vec{v} \cdot \vec{w}$ is the scalar:

$\vec{v} \cdot \vec{w} = v_1 w_1 + v_2 w_2 + \cdots + v_n w_n = \sum_{k=1}^{n} v_k w_k$

Note: dot product does not distinguish between row vectors and column vectors.

Example

\[\begin{bmatrix} 5 \\ 2 \\ -3 \end{bmatrix} \cdot \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} = 5(1) + 2(-1) + (-3)(-1) = 5 - 2 + 3 = 6\]

An important way to think about dot product:

\[\begin{bmatrix} 5 & 2 & -3 \end{bmatrix} \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix}\]

The product $A\vec{x}$ : Suppose $A$ is $n\times m$ and $\vec{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_m \end{bmatrix} $

Size: $\left( n\times m \right) \left( m \times 1\right) \to n \times 1$

Way 1: Row Viewpoint

\[A = \begin{bmatrix} -- \vec{w_1} -- \\ -- \vec{w_2} -- \\ \vdots \\ -- \vec{w_n} -- \\ \end{bmatrix}\]

Note: $\vec{w}_i \in \mathbb{R}^m$

\[A\vec{x} = \begin{bmatrix} \vec{w_1} \cdot \vec{x} \\ \vec{w_2} \cdot \vec{x} \\ \vdots \\ \vec{w_n} \cdot \vec{x} \end{bmatrix}\]

(Size $n \times 1$)

Way 2: Column Viewpoint

\[A = \begin{bmatrix} | & | & & | \\ \vec{v_1} & \vec{v_2} & \cdots & \vec{v_m} \\ | & | & & | \\ \end{bmatrix}\]

$\vec{v_j} \in \mathbb{R}^n$

$A \vec{x} = x_1 \vec{v_1} + x_2 \vec{v_2} + \cdots + x_m \vec{v_m}$

(Size $n \times 1$)
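Both ways of computing $A\vec{x}$ can be sketched in a few lines of Python to see that they agree. The function names `matvec_rows` and `matvec_columns` are made up for this illustration, and matrices are stored as plain lists of rows.

```python
def check_sizes(A, x):
    # A is n x m, so x must have exactly m entries.
    if any(len(row) != len(x) for row in A):
        raise ValueError("product is not defined: sizes do not match")

def matvec_rows(A, x):
    """Way 1: entry i is the dot product of row i of A with x."""
    check_sizes(A, x)
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def matvec_columns(A, x):
    """Way 2: x_1 * (column 1) + x_2 * (column 2) + ... + x_m * (column m)."""
    check_sizes(A, x)
    n = len(A)
    result = [0] * n
    for j, xj in enumerate(x):
        column = [A[i][j] for i in range(n)]
        result = [r + xj * c for r, c in zip(result, column)]
    return result

A = [[5, -1, 2, 6],
     [4, 3, 0, 1],
     [-1, 0, 2, -1]]
x = [0, 2, -1, 3]

by_rows = matvec_rows(A, x)
by_columns = matvec_columns(A, x)
print(by_rows, by_columns)
```

Passing a vector with the wrong number of entries (for instance three entries with this $3 \times 4$ matrix) raises an error, mirroring the fact that such a product is not defined.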

Example

\[\begin{bmatrix} 5 & -1 & 2 & 6 \\ 4 & 3 & 0 & 1 \\ -1 & 0 & 2 & -1 \end{bmatrix} \begin{bmatrix} 0 \\ 2 \\ -1 \\ 3 \end{bmatrix} =\]

\[0 \begin{bmatrix} 5 \\ 4 \\ -1 \end{bmatrix} + 2 \begin{bmatrix} -1 \\ 3 \\ 0 \end{bmatrix} - 1 \begin{bmatrix} 2 \\ 0 \\ 2 \end{bmatrix} + 3 \begin{bmatrix} 6 \\ 1 \\ -1 \end{bmatrix} = \begin{bmatrix} 14 \\ 9 \\ -5 \end{bmatrix}\]

Example

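\[\begin{bmatrix} 5 & -1 & 2 & 6 \\ 4 & 3 & 0 & 1 \\ -1 & 0 & 2 & -1 \end{bmatrix} \begin{bmatrix} 2 \\ 3 \\ 2 \end{bmatrix}\]

The product is not defined: the matrix is $3 \times 4$, so it can only multiply vectors in $\mathbb{R}^4$, and this vector is in $\mathbb{R}^3$.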

Example

\[\begin{bmatrix} 5 & -2 \\ 3 & 1 \\ 1 & 4 \\ -1 & 0 \\ 0 & 6 \end{bmatrix} \begin{bmatrix} 2 \\ -1 \end{bmatrix} = \begin{bmatrix} 10 + 2 \\ 6 - 1 \\ 2 - 4 \\ -2 + 0 \\ 0 - 6 \end{bmatrix} = \begin{bmatrix} 12 \\ 5 \\ -2 \\ -2 \\ -6 \end{bmatrix}\]

Definition:

A vector $\vec{b}$ in $\mathbb{R}^n$ is a linear combination of $\vec{v_1},\ \vec{v_2},\ \cdots,\ \vec{v_m}$ in $\mathbb{R}^n$ provided there exist scalars $x_1,\ x_2,\ x_3,\ \cdots ,\ x_m$ with $\vec{b} = x_1 \vec{v_1} + x_2 \vec{v_2} + x_3 \vec{v_3} + \cdots + x_m \vec{v_m}$.

Example

$\begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix} $ is a linear combination of $\begin{bmatrix} 0 \\ 2 \\ 0 \\ -1 \end{bmatrix}$ and $\begin{bmatrix} 2 \\ 0 \\ 1 \\ 1 \end{bmatrix}$

\[\begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix} = 5 \begin{bmatrix} 0 \\ 2 \\ 0 \\ -1 \end{bmatrix} + 2 \begin{bmatrix} 2 \\ 0 \\ 1 \\ 1 \end{bmatrix}\]

Example

$\begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix} $ is a linear combination of $\vec{e_1} = \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} $, $\vec{e_2} = \begin{bmatrix} 0 \\ 1 \\ 0 \\ 0 \end{bmatrix} $, $\vec{e_3} = \begin{bmatrix} 0 \\ 0 \\ 1 \\ 0 \end{bmatrix}$, and $\vec{e_4} = \begin{bmatrix} 0 \\ 0 \\ 0 \\ 1 \end{bmatrix}$.

In $\mathbb{R}^n$ vector, for $1 \le i \le n$ : $\vec{e_i}$ has 1 in $i$th spot and 0 elsewhere.

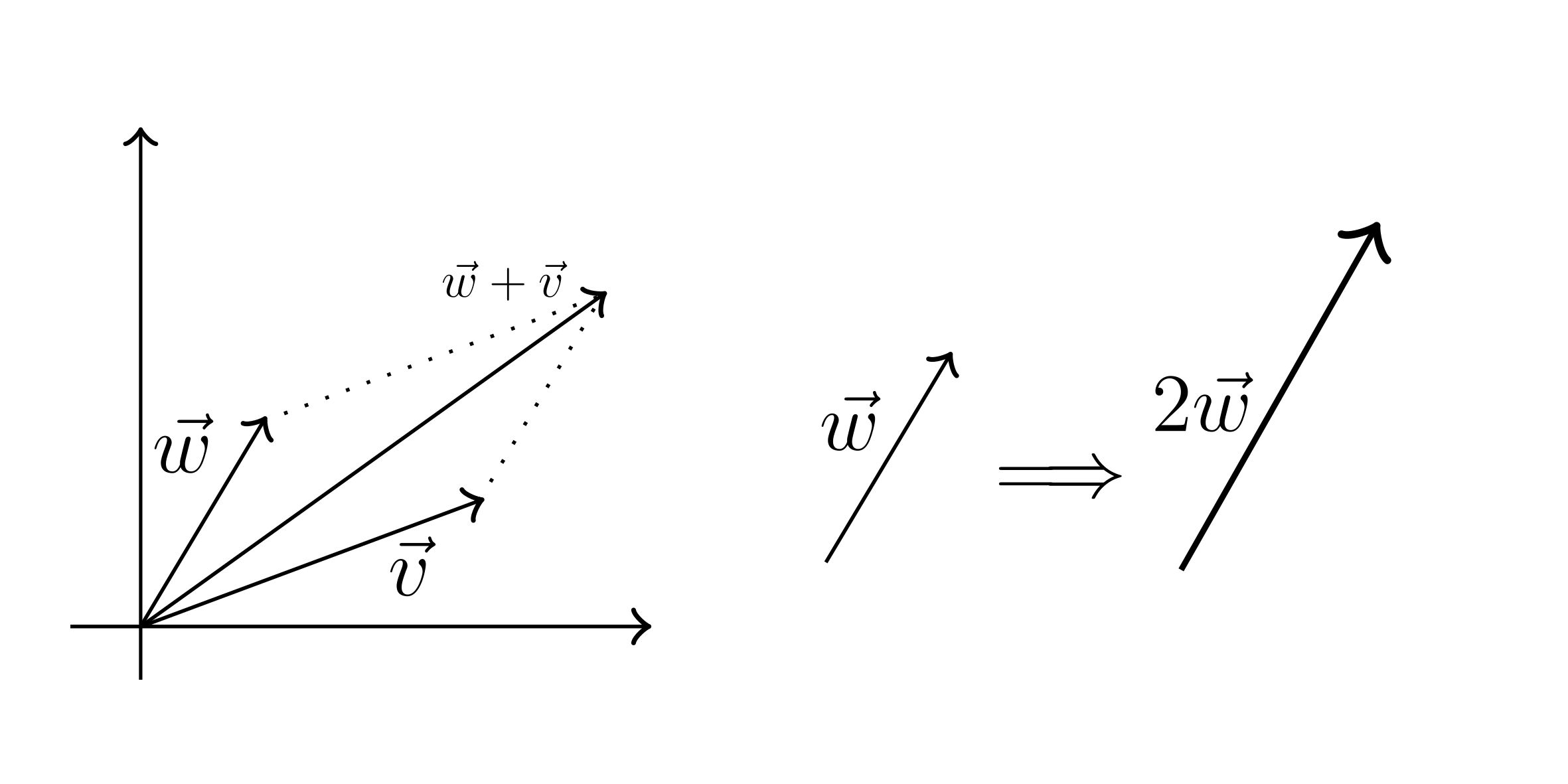

\[\begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix} = 4 \vec{e_1} + 10 \vec{e_2} + 2 \vec{e_3} - 3 \vec{e_4}\]Adding vectors with parallelogram rule

Example

$\begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}$ in $\mathbb{R}^3$ is not a linear combination of $\vec{e_1}$ and $\vec{e_2}$. Linear combinations of $\vec{e_1}$ and $\vec{e_2}$ just fill out the xy-plane. They can never move along the z-axis.

Example

Let $\vec{b} = \begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix}$. Is $\vec{b}$ a linear combination of $\vec{v} = \begin{bmatrix} 4 \\ 2 \\ 1 \\ -1 \end{bmatrix}$ and $\vec{w} = \begin{bmatrix} 2 \\ -1 \\ 1 \\ 1 \end{bmatrix}$?

What we want: scalars $x_1$, $x_2$ with:

\[x_1 \begin{bmatrix} 4 \\ 2 \\ 1 \\ -1 \end{bmatrix} + x_2 \begin{bmatrix} 2 \\ -1 \\ 1 \\ 1 \end{bmatrix} = \begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix}\]

(We will finish this next lecture)

Quiz 1 Preparation

Example

Solve the linear system by elementary row operations.

\[\begin{bmatrix} 1 & 6 & 2 & -5 & \big| & 3 \\ 0 & 0 & 2 & -8 & \big| & 2 \\ 1 & 6 & 1 & -1 & \big| & 2 \end{bmatrix} \overset{-R_1 + R_3}{\implies} \begin{bmatrix} 1 & 6 & 2 & -5 & \big| & 3 \\ 0 & 0 & 2 & -8 & \big| & 2 \\ 0 & 0 & -1 & 4 & \big| & -1 \end{bmatrix}\]

\[\overset{\frac{1}{2} R_2}{\implies} \begin{bmatrix} 1 & 6 & 2 & -5 & \big| & 3 \\ 0 & 0 & 1 & -4 & \big| & 1 \\ 0 & 0 & -1 & 4 & \big| & -1 \end{bmatrix} \overset{R_2 + R_3}{\implies} \begin{bmatrix} 1 & 6 & 2 & -5 & \big| & 3 \\ 0 & 0 & 1 & -4 & \big| & 1 \\ 0 & 0 & 0 & 0 & \big| & 0 \end{bmatrix}\]

\[\overset{-2R_2 + R_1}{\implies} \begin{bmatrix} 1 & 6 & 0 & 3 & \big| & 1 \\ 0 & 0 & 1 & -4 & \big| & 1 \\ 0 & 0 & 0 & 0 & \big| & 0 \end{bmatrix}\]

The free variables are $x_2$ and $x_4$:

$x_2 = s$

$x_4 = t$

$x_1 = 1 - 6s - 3t$

$x_3 = 1 + 4t$

\[\begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} 1-6s-3t \\ s \\ 1+4t \\ t \end{bmatrix}\]

Example

Find all polynomials of the form $f(t) = a + bt + ct^2$ with the point (1, 6) on the graph of $f$ such that $f^{\prime}(2) = 9$ and $f^{\prime\prime}(8) = 4$.

$f^{\prime}(t) = b + 2ct$

$f^{\prime\prime}(t) = 2c$

$f(1) = 6 \to a + b + c = 6$

$f^{\prime}(2) = 9 \to b + 4c = 9$

$f^{\prime\prime}(8) = 4 \to 2c = 4$

$c = 2$

$b + 8 = 9 \implies b = 1$

$a + 1 + 2 = 6 \implies a=3$

$f(t) = 3 + t + 2t^2$

Example

Find one value $c$ so that the augmented matrix below corresponds to an inconsistent linear system.

\[\begin{bmatrix} 1 & 2 & -1 & \big| & 3 \\ 2 & 4 & -2 & \big| & c \end{bmatrix}\]

Note that for a linear system to be inconsistent, a row echelon form of its augmented matrix needs a row of the form $\begin{bmatrix} 0 & 0 & 0 & \big| & b \end{bmatrix}$ with $b \neq 0$.

\[\begin{bmatrix} 1 & 2 & -1 & \big| & 3 \\ 2 & 4 & -2 & \big| & c \end{bmatrix} \overset{2R_1 - R_2}{\implies} \begin{bmatrix} 1 & 2 & -1 & \big| & 3 \\ 0 & 0 & 0 & \big| & 6 - c \end{bmatrix}\]

So the system is inconsistent exactly when $c \neq 6$; for example, $c = 0$ works.

Example

Consider the matrices $A$, $B$, $C$, $D$ below.

\[A = \begin{bmatrix} 1 & 3 & 0 & -1 & 5 \\ 0 & 1 & 0 & 9 & 0 \\ 0 & 0 & 0 & 0 & 0 \\ 0 & 0 & 1 & 1 & 4 \\ \end{bmatrix}\]

\[B = \begin{bmatrix} 0 & 1 & 6 & 0 & 3 & -1 \\ 0 & 0 & 0 & 1 & 2 & 2 \\ 0 & 0 & 0 & 0 & 0 & 0 \end{bmatrix}\]

\[C = \begin{bmatrix} 0 & 1 & 0 & 2 & 4 \end{bmatrix}\]

\[D = \begin{bmatrix} 0 \\ 1 \\ 0 \\ 2 \\ 4 \end{bmatrix}\]

a) Which of the matrices are in reduced row-echelon form (rref)?

Solution

B, C

b) List the rank of each matrix

Solution

rank($A$) = 3

rank($B$) = 2

rank($C$) = rank($D$) = 1

A linear system is consistent if and only if the rank of the coefficient matrix equals the rank of the augmented matrix. For example, a row like this would change the rank:

\[\begin{bmatrix} \vdots & \big| & \vdots \\ 0 & \big| & 1 \end{bmatrix}\]

Recall

$A \vec{x}$ for $A$ an $n \times m$ matrix and $\vec{x} = \begin{bmatrix} x_1 \\ \vdots \\ x_m \end{bmatrix} $

Row Viewpoint:

Suppose $\vec{w_1}, \vec{w_2}, \cdots, \vec{w_n}$ in $\mathbb{R}^m$ are the rows of $A$, then:

\[A\vec{x} = \begin{bmatrix} - & \vec{w_1} \cdot \vec{x} & - \\ - & \vec{w_2} \cdot \vec{x} & - \\ & \vdots & \\ - & \vec{w_n} \cdot \vec{x} & - \\ \end{bmatrix}\]

The $i$th entry of $A \vec{x}$ is [Row $i$ of $A$] $\cdot \vec{x}$

Column Viewpoint:

Suppose $\vec{v_1},\ \vec{v_2},\ \cdots ,\ \vec{v_m}$ in $\mathbb{R}^n$ are the columns of $A$, i.e. $A = \begin{bmatrix} | & | && | \\ \vec{v_1} & \vec{v_2} & \cdots & \vec{v_m} \\ | & | && | \end{bmatrix} $

Then, $A \vec{x} = x_1 \vec{v_1} + x_2 \vec{v_2} + \cdots + x_m \vec{v_m}$

Properties of the product $A\vec{x}$: Suppose $A$ is $n\times m$, $\vec{x}$, $\vec{y}$ are in $\mathbb{R}^m$ and $k$ is a scalar

- $A(\vec{x} + \vec{y}) = A\vec{x} + A\vec{y}$

- $A(k\vec{x}) = kA\vec{x}$

Justification of 2:

$k\vec{x} = \begin{bmatrix} kx_1 \\ kx_2 \\ \vdots \\ kx_m \end{bmatrix}$

$A(k\vec{x}) = (kx_1) \vec{v_1} + (kx_2)\vec{v_2} + \cdots + (kx_m) \vec{v_m}$

$= k(x_1 \vec{v_1} + x_2 \vec{v_2} + \cdots + x_m \vec{v_m})$

$= kA\vec{x}$
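Property 1 can be justified the same way from the column viewpoint. The lines below are a sketch in the same style, assuming $\vec{y}$ has entries $y_1,\ y_2,\ \cdots,\ y_m$ (notation introduced here only for this derivation):

$\vec{x} + \vec{y} = \begin{bmatrix} x_1 + y_1 \\ x_2 + y_2 \\ \vdots \\ x_m + y_m \end{bmatrix}$

$A(\vec{x} + \vec{y}) = (x_1 + y_1) \vec{v_1} + (x_2 + y_2)\vec{v_2} + \cdots + (x_m + y_m) \vec{v_m}$

$= (x_1 \vec{v_1} + x_2 \vec{v_2} + \cdots + x_m \vec{v_m}) + (y_1 \vec{v_1} + y_2 \vec{v_2} + \cdots + y_m \vec{v_m})$

$= A\vec{x} + A\vec{y}$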

We continue with this question: is $\begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix}$ a linear combination of $\begin{bmatrix} 4 \\ 2 \\ 1 \\ -1 \end{bmatrix}$ and $\begin{bmatrix} 2 \\ -1 \\ 1 \\ 1 \end{bmatrix}$?

Can we find $x_1$, $x_2$ scalars such that $x_1 \begin{bmatrix} 4 \\ 2 \\ 1 \\ -1 \end{bmatrix} + x_2 \begin{bmatrix} 2 \\ -1 \\ 1 \\ 1 \end{bmatrix} = \begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix}$?

Is there a solution to the linear system $\begin{bmatrix} 4 & 2 & \big| & 4 \\ 2 & -1 & \big| & 10 \\ 1 & 1 & \big| & 2 \\ -1 & 1 & \big| & -3 \end{bmatrix}$?

\[\begin{bmatrix} 4 & 2 & \big| & 4 \\ 2 & -1 & \big| & 10 \\ 1 & 1 & \big| & 2 \\ -1 & 1 & \big| & -3 \end{bmatrix} \overset{R_1 \leftrightarrow R_3}{\implies} \begin{bmatrix} 1 & 1 & \big| & 2 \\ 2 & -1 & \big| & 10 \\ 4 & 2 & \big| & 4 \\ -1 & 1 & \big| & -3 \end{bmatrix}\]

\[\implies \begin{bmatrix} 1 & 1 & \big| & 2 \\ 0 & -3 & \big| & 6 \\ 0 & -2 & \big| & -4 \\ 0 & 2 & \big| & -1 \end{bmatrix} \implies \begin{bmatrix} 1 & 1 & \big| & 2 \\ 0 & 1 & \big| & -2 \\ 0 & 0 & \big| & -8 \\ 0 & 0 & \big| & 3 \end{bmatrix}\]

This linear system is inconsistent so: No, there is no solution.

We see

\[\begin{bmatrix} 4 & 2 & \big| & 4 \\ 2 & -1 & \big| & 10 \\ 1 & 1 & \big| & 2 \\ -1 & 1 & \big| & -3 \\ \end{bmatrix} \leftrightarrow \begin{bmatrix} 4 & 2 \\ 2 & -1 \\ 1 & 1 \\ -1 & 1 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} 4 \\ 10 \\ 2 \\ -3 \end{bmatrix}\]

This correspondence works generally:

- A linear system with augmented matrix $\begin{bmatrix} A & \big| & \vec{b} \end{bmatrix}$ can be written in matrix form as $A\vec{x} = \vec{b}$.

Moreover, this system is consistent if and only if $\vec{b}$ is a linear combination of the columns of $A$. (More in sections 3.1-3.3, 5.4)
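The consistency criterion gives a mechanical test for linear combinations. The Python sketch below is an illustration under stated assumptions: the names `is_consistent` and `is_linear_combination` are invented here, and the test eliminates on the coefficient columns only, then looks for a row of the form $\begin{bmatrix} 0 & \cdots & 0 & \big| & c \end{bmatrix}$ with $c \neq 0$.

```python
from fractions import Fraction

def is_consistent(augmented):
    """True when the system with this augmented matrix has at least one solution."""
    M = [[Fraction(x) for x in row] for row in augmented]
    n_rows, n_cols = len(M), len(M[0])
    pivot_row = 0
    for col in range(n_cols - 1):  # eliminate on coefficient columns only
        pivot = next((r for r in range(pivot_row, n_rows) if M[r][col] != 0), None)
        if pivot is None:
            continue
        M[pivot_row], M[pivot] = M[pivot], M[pivot_row]
        for r in range(pivot_row + 1, n_rows):
            factor = M[r][col] / M[pivot_row][col]
            M[r] = [a - factor * b for a, b in zip(M[r], M[pivot_row])]
        pivot_row += 1
    # Inconsistent exactly when some row reads 0 ... 0 | c with c != 0.
    return not any(all(x == 0 for x in row[:-1]) and row[-1] != 0 for row in M)

def is_linear_combination(vectors, b):
    """Is b a linear combination of the given vectors (used as columns of A)?"""
    augmented = [[v[i] for v in vectors] + [b[i]] for i in range(len(b))]
    return is_consistent(augmented)

b = [4, 10, 2, -3]
first = is_linear_combination([[4, 2, 1, -1], [2, -1, 1, 1]], b)
second = is_linear_combination([[0, 2, 0, -1], [2, 0, 1, 1]], b)
print(first, second)
```

The first call repeats the question worked out above; the second uses the pair of vectors from the earlier example where $\vec{b}$ was written explicitly as a combination.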

2.1 Introduction to Linear Transformations

Recall that a function $f : \mathbb{R}^m \to \mathbb{R}^n$ is a rule that assigns to each vector in $\mathbb{R}^m$ a unique vector in $\mathbb{R}^n$.

- Domain: $\mathbb{R}^m$

- Codomain/target space: $\mathbb{R}^n$

- Image/range: $\{ f(\vec{x}) : \vec{x} \in \mathbb{R}^m \}$

Example

$f : \mathbb{R}^3 \to \mathbb{R}$ given by $f \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \sqrt{x_1^2 + x_2^2 + x_3^2}$

This gives the length of the vector.

Domain: $\mathbb{R}^3$

Range: $[0, \infty)$

Definition:

A function $T : \mathbb{R}^m \to \mathbb{R}^n$ is a linear transformation provided there exists an $n \times m$ matrix $A$ such that $T(\vec{x}) = A\vec{x}$ for all $\vec{x} \in \mathbb{R}^m$.

Comments:

- “Linear functions” in calculus 1/2/3: graph is a line/plane/3-space

Examples:

$f(x) = 5x + 4$

$f(x,\ y) = 2x - 3y + 8$

Neither of these is a linear transformation, since each has a nonzero constant term. Functions like these should be called affine.

- For any $n\times m$ matrix $A$, $A\vec{0} = \vec{0}$ : For any linear transformation $T: T(\vec{0}) = \vec{0}$.

Example

For scalars, $a$, $b$, $c$, the function $g(x,\ y,\ z) = ax + by + cz$ is a linear transformation.

$g : \mathbb{R}^3 \to \mathbb{R}$

$g \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} a & b & c \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix}$

The matrix of $g$ is: $\begin{bmatrix} a & b & c \end{bmatrix}$

Example

The function $f(x) = \begin{bmatrix} x \\ 5 \\ -x \end{bmatrix}$ is not a linear transformation.

$f : \mathbb{R} \to \mathbb{R}^3$

$f(0) = \begin{bmatrix} 0 \\ 5 \\ 0 \end{bmatrix} \neq \vec{0}$

Therefore $f$ is not a linear transformation.

Question: What is the linear transformation corresponding to $I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$?

\[\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} = x \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} + y \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} + z \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix} = \begin{bmatrix} x \\ y \\ z \end{bmatrix}\]

Answer: Identity map. It maps any vector to itself.

Consider $T(\vec{x}) = A\vec{x}$ where $A = \begin{bmatrix} 5 & 1 & 3 \\ 4 & -1 & 6 \\ 2 & 0 & 7 \\ 3 & 2 & 5 \end{bmatrix}$. Find $T(\vec{e_1})$, $T(\vec{e_2})$, and $T(\vec{e_3})$.

Recall that $\vec{e_1} = \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}$, $\vec{e_2} = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}$, $\vec{e_3} = \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}$

Note: $A$ is $4\times 3$. $T : \mathbb{R}^3 \to \mathbb{R}^4$

\[T(\vec{e_1}) = \begin{bmatrix} 5 \\ 4 \\ 2 \\ 3 \end{bmatrix}\]

\[T(\vec{e_2}) = \begin{bmatrix} 1 \\ -1 \\ 0 \\ 2 \end{bmatrix}\]

\[T(\vec{e_3}) = \begin{bmatrix} 3 \\ 6 \\ 7 \\ 5 \end{bmatrix}\]

Suppose $T : \mathbb{R}^m \to \mathbb{R}^n$ is a linear transformation.

The matrix of $T$ is

\[\begin{bmatrix} | & | & & | \\ T(\vec{e_1}) & T(\vec{e_2}) & \cdots & T(\vec{e_m}) \\ | & | & & | \\ \end{bmatrix}\]

where $\vec{e_1},\ \vec{e_2},\ \cdots ,\ \vec{e_m}$ are the standard vectors in $\mathbb{R}^m$, i.e. $\vec{e_i}$ has a 1 in the $i$th spot and 0's elsewhere.

Example

Find the matrix of the transformation $T : \mathbb{R}^4 \to \mathbb{R}^2$ given by $T \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} x_4 \\ 2x_2 \end{bmatrix}$.

$T(\vec{e_1}) = \begin{bmatrix} 0 \\ 0 \end{bmatrix} $

$T(\vec{e_2}) = \begin{bmatrix} 0 \\ 2 \end{bmatrix}$

$T(\vec{e_3}) = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$

$T(\vec{e_4}) = \begin{bmatrix} 1 \\ 0 \end{bmatrix}$

$A = \begin{bmatrix} 0 & 0 & 0 & 1 \\ 0 & 2 & 0 & 0 \end{bmatrix}$

Check:

\[\begin{bmatrix} 0 & 0 & 0 & 1 \\ 0 & 2 & 0 & 0 \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \\ x_4 \end{bmatrix} = \begin{bmatrix} x_4 \\ 2x_2 \end{bmatrix}\]
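The recipe "columns are the images of the standard vectors" translates directly into code. The following Python sketch is illustrative: `standard_vector` and `matrix_of` are names invented here, indices start at 0, and the result is only meaningful when the function passed in really is a linear transformation.

```python
def standard_vector(m, i):
    """The i-th standard vector in R^m (0-based): 1 in spot i, 0 elsewhere."""
    return [1 if k == i else 0 for k in range(m)]

def matrix_of(T, m):
    """Build the matrix whose j-th column is T(e_j), for T defined on R^m."""
    columns = [T(standard_vector(m, j)) for j in range(m)]
    n = len(columns[0])
    # Transpose the list of columns into a list of rows.
    return [[columns[j][i] for j in range(m)] for i in range(n)]

# The transformation from the example: T(x1, x2, x3, x4) = (x4, 2*x2).
def T(x):
    x1, x2, x3, x4 = x
    return [x4, 2 * x2]

A = matrix_of(T, 4)
print(A)
```

Because `matrix_of` only ever evaluates `T` on the standard vectors, it cannot detect a non-linear function; checking linearity is a separate question.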

Example

Find the matrix of this transformation from $\mathbb{R}^2$ to $\mathbb{R}^4$ given by $\begin{vmatrix} y_1 = 9x_1 + 3x_2 \\ y_2 = 2x_1 - 9x_2 \\ y_3 = 4x_1 - 9x_2 \\ y_4 = 5x_1 + x_2 \end{vmatrix}$.

$T(\vec{e_1}) = T\left( \begin{bmatrix} 1 \\ 0 \end{bmatrix} \right) = \begin{bmatrix} 9 \\ 2 \\ 4 \\5 \end{bmatrix}$

$T(\vec{e_2}) = T \left( \begin{bmatrix} 0 \\ 1 \end{bmatrix} \right) = \begin{bmatrix} 3 \\ -9 \\ -9 \\ 1 \end{bmatrix}$

\[A = \begin{bmatrix} 9 & 3 \\ 2 & -9 \\ 4 & -9 \\ 5 & 1 \end{bmatrix}\]

Theorem:

A function $T : \mathbb{R}^m \to \mathbb{R}^n$ is a linear transformation if and only if $T$ satisfies:

- $T(\vec{v} + \vec{w}) = T(\vec{v}) + T(\vec{w})$ for all $\vec{v}$, $\vec{w}$ in $\mathbb{R}^m$

- $T(k\vec{v}) = kT(\vec{v})$ for all $\vec{v}$ in $\mathbb{R}^m$ and scalars $k$.

Proof:

If $T : \mathbb{R}^m \to \mathbb{R}^n$ is a linear transformation, there is an $n \times m$ matrix $A$ with $T(\vec{x}) = A\vec{x}$. (1) and (2) hold from matrix properties.

Assume $T : \mathbb{R}^m \to \mathbb{R}^n$ satisfies (1) and (2). Find matrix $A$ with $T(\vec{x}) = A\vec{x}$ for all $\vec{x}$ in $\mathbb{R}^m$.

Let $A = \begin{bmatrix} | & | & & | \\ T(\vec{e_1}) & T(\vec{e_2}) & \cdots & T(\vec{e_m}) \\ | & | & & | \end{bmatrix} $. Let $\vec{x} = \begin{bmatrix} x_1 \\ \vdots \\ x_m \end{bmatrix}$

$A \vec{x} = x_1 T(\vec{e_1}) + x_2 T(\vec{e_2}) + \cdots + x_m T(\vec{e_m})$

$A \vec{x} = T(x_1 \vec{e_1}) + T (x_2 \vec{e_2}) + \cdots + T (x_m \vec{e_m})$ (property 2)

$A \vec{x} = T(x_1 \vec{e_1} + x_2 \vec{e_2} + \cdots + x_m \vec{e_m})$ (property 1)

$A \vec{x} = T(\vec{x})$ as $\vec{x} = x_1 \vec{e_1} + x_2 \vec{e_2} + \cdots + x_m \vec{e_m}$
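The construction in the proof (column $j$ of $A$ is $T(\vec{e_j})$) can be sketched in code. This is only an illustrative sketch: the helper names `matrix_of` and `apply` are made up for this note, and the map `T` is the $\mathbb{R}^2 \to \mathbb{R}^4$ example from above.

```python
def matrix_of(T, m):
    """Build the matrix of T (as a list of rows); column j is T(e_j)."""
    columns = []
    for j in range(m):
        e_j = [1 if i == j else 0 for i in range(m)]  # j-th standard basis vector
        columns.append(T(e_j))
    n = len(columns[0])
    # Transpose the list of columns into a list of rows.
    return [[columns[j][i] for j in range(m)] for i in range(n)]


def apply(A, x):
    """Compute the matrix-vector product A x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def T(x):
    """The example transformation from R^2 to R^4 used earlier in these notes."""
    x1, x2 = x
    return [9 * x1 + 3 * x2, 2 * x1 - 9 * x2, 4 * x1 - 9 * x2, 5 * x1 + x2]


A = matrix_of(T, 2)
print(A)                            # [[9, 3], [2, -9], [4, -9], [5, 1]]
print(apply(A, [2, -1]) == T([2, -1]))  # True
```

Checking one vector does not prove $T(\vec{x}) = A\vec{x}$ in general; the proof above does that.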

Example

Show the transformation $T : \mathbb{R}^2 \to \mathbb{R}^2$ is not linear, where $T$ is given by:

$y_1 = x_1^2$

$y_2 = x_1 + x_2$

\[T \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \begin{bmatrix} x_1^2 \\ x_1 + x_2 \end{bmatrix}\]

\[T \begin{bmatrix} 1 \\ 1 \end{bmatrix} = \begin{bmatrix} 1^2 \\ 1 + 1 \end{bmatrix} = \begin{bmatrix} 1 \\ 2 \end{bmatrix}\]

\[T \begin{bmatrix} -1 \\ -1 \end{bmatrix} = \begin{bmatrix} (-1)^2 \\ -1 -1 \end{bmatrix} = \begin{bmatrix} 1 \\ -2 \end{bmatrix} \neq - \begin{bmatrix} 1 \\ 2 \end{bmatrix}\]

That is:

\[T \left( -\begin{bmatrix} 1 \\ 1 \end{bmatrix} \right) \neq - T \left( \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right)\]

This fails property 2 (with $k = -1$). Therefore, $T$ is not a linear transformation.

Example

Recall the function $f : \mathbb{R}^3 \to \mathbb{R}$ given by $f \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \sqrt{x_1^2 + x_2^2 + x_3^2}$. Show that $f$ is not a linear transformation.

$f \begin{bmatrix} -1 \\ 0 \\ 0 \end{bmatrix} = \sqrt{\left( -1 \right) ^{2} + 0 + 0} = 1$

$-1 f \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} = -1 \sqrt{1 + 0 + 0} =-1$

$f(-\vec{e_1}) \neq -f (\vec{e_1})$ (fails property 2)

or

$f(\vec{e_1}) = 1$

$f(\vec{e_2}) = 1$

$f(\vec{e_1} + \vec{e_2}) = f \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix} = \sqrt{1 + 1 + 0} = \sqrt{2}$

$f(\vec{e_1} + \vec{e_2}) \neq f(\vec{e_1}) + f(\vec{e_2})$ (fails property 1)
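Both failures can also be checked numerically. The sketch below uses hypothetical helper names (`add`, `scale`) and tests the two properties only on the specific vectors from the example; a single failing pair is enough to show $f$ is not linear.

```python
import math


def f(x):
    """Length of x: sqrt(x1^2 + x2^2 + x3^2)."""
    return math.sqrt(sum(c * c for c in x))


def add(v, w):
    return [a + b for a, b in zip(v, w)]


def scale(k, v):
    return [k * a for a in v]


e1 = [1, 0, 0]
e2 = [0, 1, 0]

# Property 2 with k = -1: is f(-e1) equal to -f(e1)?
homogeneous = math.isclose(f(scale(-1, e1)), -1 * f(e1))
# Property 1: is f(e1 + e2) equal to f(e1) + f(e2)?
additive = math.isclose(f(add(e1, e2)), f(e1) + f(e2))

print(homogeneous, additive)  # False False
```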

2.2 Linear Transformations in Geometry

Suppose $T : \mathbb{R}^2 \to \mathbb{R}^2$ is a linear transformation. Geometrically, we will discuss:

- Orthogonal projection

- Reflection

- Scaling

- Rotation

- Horizontal or vertical shear

Background material (Geometry): See Appendix A in textbook

- $\mid \mid \vec{v} \mid \mid$ is the length (magnitude, norm) of $\vec{v}$ in $\mathbb{R}^n$. For $\vec{v} = \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix}$,

$ \mid \mid \vec{v} \mid \mid = \sqrt{\vec{v} \cdot \vec{v}} = \sqrt{v_1^2 + v_2^2 + \cdots + v_n^2}$

- If $c$ is a scalar and $\vec{v} \in \mathbb{R}^n$, then $\mid \mid c \vec{v} \mid \mid = \mid c \mid \mid \mid \vec{v} \mid \mid$. Here $ \mid c \mid $ is the absolute value of $c$.

- A vector $\vec{u} \in \mathbb{R}^n$ is a unit vector provided $ \mid \mid \vec{u} \mid \mid =1$.

Example: $\vec{e_1},\ \vec{e_2}, \cdots ,\ \vec{e_n}$ are all unit vectors.

- Two vectors $\vec{v},\ \vec{w}$ in $\mathbb{R}^n$ are orthogonal (perpendicular, normal) provided $\vec{v} \cdot \vec{w} = 0$ (the angle between $\vec{v}$ and $\vec{w}$ is a right angle).

- Two nonzero vectors $\vec{v}$, $\vec{w}$ in $\mathbb{R}^n$ are parallel provided they are scalar multiples of each other.

Example

Let $\vec{v} = \begin{bmatrix} 6 \\ -2 \\ -1 \end{bmatrix}$ and $\vec{w} = \begin{bmatrix} 2 \\ 5 \\2 \end{bmatrix} $

1) Show $\vec{v}$ and $\vec{w}$ are perpendicular

$\vec{v} \cdot \vec{w} = 6(2) + (-2)(5) + (-1)(2) = 0$

2) Find two unit vectors parallel to $\vec{w}$

$\mid \mid \vec{w} \mid \mid = \sqrt{2^{2} + 5^{2} + 2^{2}} = \sqrt{4 + 25 + 4} = \sqrt{33}$

The two unit vectors parallel to $\vec{w}$ are $\frac{\vec{w}}{ \mid \mid \vec{w} \mid \mid } = \frac{1}{\sqrt{33}} \begin{bmatrix} 2 \\ 5 \\ 2 \end{bmatrix}$ and $\frac{-1}{\sqrt{33}} \begin{bmatrix} 2 \\ 5 \\ 2 \end{bmatrix}$ (the length of a unit vector must be 1).

The first of these is sometimes called the normalization of $\vec{w}$ or the direction of $\vec{w}$.

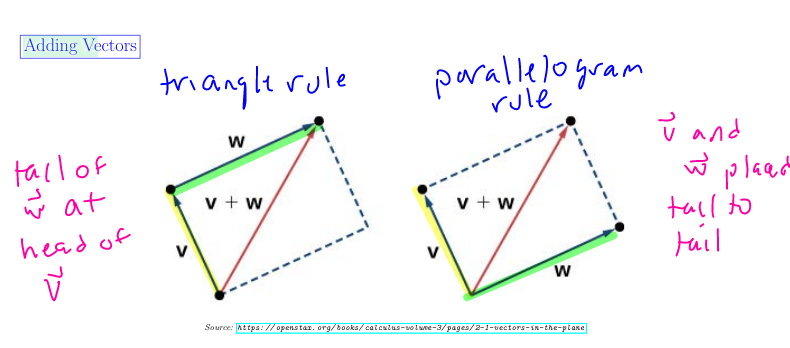

Adding Vectors (triangle rule and parallelogram rule):

Properties of the Dot Product

Consider $\vec{u}, \vec{v}, \vec{w} \in \mathbb{R}^{n}$ and $k$ scalar.

- $\vec{v} \cdot \vec{w} = \vec{w} \cdot \vec{v}$

- $k\left( \vec{v} \cdot \vec{w} \right) = \left( k \vec{v} \right) \cdot \vec{w} = \vec{v} \cdot \left( k \vec{w} \right)$

- $\vec{u} \cdot \left( \vec{v} + \vec{w} \right) = \vec{u} \cdot \vec{v} + \vec{u} \cdot \vec{w}$

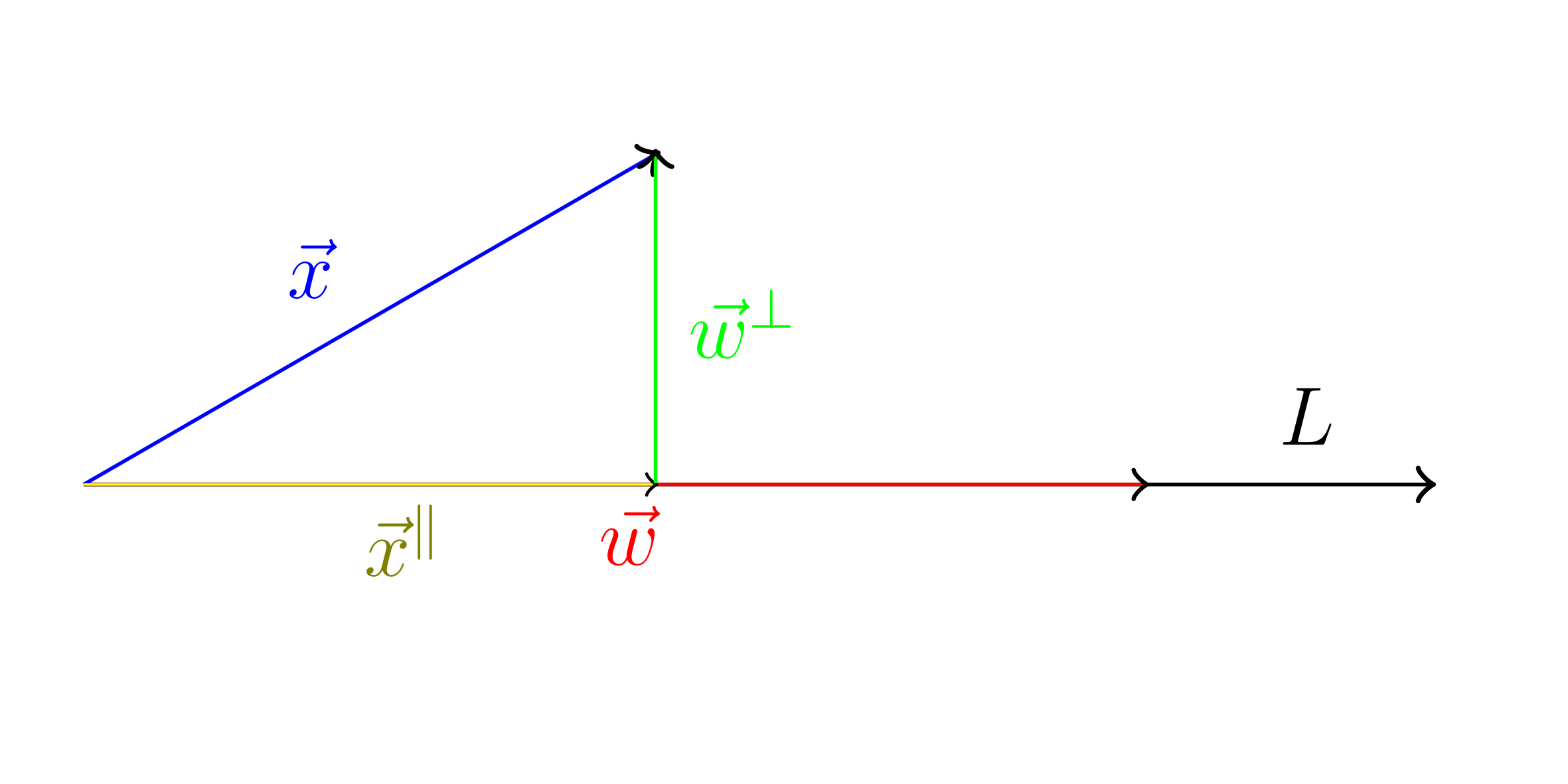

Orthogonal Projection onto a line $L$

Suppose $L$ is a line in $\mathbb{R}^{n}$ and $\vec{w}$ is a nonzero vector with $L = \text{span}\{\vec{w}\}$.

Here span means all scalar multiples of $\vec{w}$.

Given $\vec{x}$ in $\mathbb{R}^{n}$, we may write $\vec{x} = \vec{x}^{\parallel} + \vec{x}^{\bot}$

$\vec{x}^{\parallel} = \text{proj}_{L} \vec{x}$:

This is the orthogonal projection of $\vec{x}$ onto $L$. Component of $\vec{x}$ parallel to $L$.

$\vec{x}^{\bot} = \vec{x} - \vec{x}^{\parallel}$:

This is the component of $\vec{x}$ perpendicular to $L$

We want: $\vec{x}^{\parallel} = k \vec{w}$. Find $k$. We also want:

- $\vec{x}^{\bot} \cdot \vec{w} = 0$

$\left( \vec{x} - \vec{x}^{\parallel} \right) \cdot \vec{w} = 0$

$\left( \vec{x} - k \vec{w}\right) \cdot \vec{w} = 0$

$\vec{x} \cdot \vec{w} - k \left( \vec{w} \cdot \vec{w} \right) = 0$

$\vec{x} \cdot \vec{w} = k \left( \vec{w} \cdot \vec{w} \right)$

$k = \frac{\vec{x} \cdot \vec{w}}{\vec{w} \cdot \vec{w}}$

The definition of a projection onto a line:

$\text{proj}_{L} \vec{x} = \frac{\vec{x} \cdot \vec{w}}{\vec{w} \cdot \vec{w}} \vec{w}$

Example

Let $L$ be the line in $\mathbb{R}^{3}$ spanned by $\vec{w} = \begin{bmatrix} 1 \\ 0 \\ -2 \end{bmatrix}$.

Find the orthogonal projection of $\vec{x} = \begin{bmatrix} 2 \\ 1 \\ -1 \end{bmatrix}$ onto $L$ and decompose $\vec{x}$ as $\vec{x}^{\parallel} + \vec{x}^{\bot}$.

$\vec{x} \cdot \vec{w} = 2(1) + 1(0) + (-1)(-2) = 4$

$\vec{w} \cdot \vec{w} = 1(1) + 0(0) + (-2)(-2) = 5$

$\vec{x}^{\parallel} = \text{proj}_{L} \vec{x} = \left( \frac{\vec{x} \cdot \vec{w}}{\vec{w} \cdot \vec{w}} \right) \vec{w}$

$\vec{x}^{\parallel} = \frac{4}{5} \begin{bmatrix} 1 \\ 0 \\ -2 \end{bmatrix} = \begin{bmatrix} \frac{4}{5} \\ 0 \\ -\frac{8}{5} \end{bmatrix}$

$\vec{x}^{\bot} = \vec{x} - \vec{x}^{\parallel} = \begin{bmatrix} 2 \\ 1 \\ -1 \end{bmatrix} - \begin{bmatrix} \frac{4}{5} \\ 0 \\ -\frac{8}{5} \end{bmatrix} = \begin{bmatrix} \frac{6}{5} \\ 1 \\ \frac{3}{5} \end{bmatrix}$

Check:

$\vec{x}^{\bot} \cdot \vec{w} = \begin{bmatrix} \frac{6}{5} \\ 1 \\ \frac{3}{5} \end{bmatrix} \cdot \begin{bmatrix} 1 \\ 0 \\ -2 \end{bmatrix} = \frac{6}{5} + 0 - \frac{6}{5} = 0$
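The projection formula $\text{proj}_{L} \vec{x} = \frac{\vec{x} \cdot \vec{w}}{\vec{w} \cdot \vec{w}} \vec{w}$ translates directly into code. The following is a minimal sketch using exact fractions; the function names `dot` and `project` are chosen for illustration only, and the vectors are the ones from the example above.

```python
from fractions import Fraction


def dot(v, w):
    """Dot product of two vectors of the same length."""
    return sum(a * b for a, b in zip(v, w))


def project(x, w):
    """Orthogonal projection of x onto the line spanned by the nonzero vector w."""
    k = Fraction(dot(x, w), dot(w, w))  # k = (x . w) / (w . w)
    return [k * c for c in w]


w = [1, 0, -2]
x = [2, 1, -1]

x_par = project(x, w)                         # component parallel to L
x_perp = [a - b for a, b in zip(x, x_par)]    # component perpendicular to L

print(x_par == [Fraction(4, 5), 0, Fraction(-8, 5)])   # True
print(x_perp == [Fraction(6, 5), 1, Fraction(3, 5)])   # True
print(dot(x_perp, w))                                  # 0
```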

Linear transformations $T : \mathbb{R}^{2} \to \mathbb{R}^{2}$ and geometry:

Suppose $\vec{w} = \begin{bmatrix} w_1 \\ w_2 \end{bmatrix}$ is a nonzero vector in $\mathbb{R}^{2}$ and $L = \text{span}\{\vec{w}\}$.

For $\vec{x}$ in $\mathbb{R}^{2}$, the map $\vec{x} \to \text{proj}_{L}\left( \vec{x} \right)$ is a linear transformation!

Let’s find the $2 \times 2$ matrix of orthogonal projection.

$\text{proj} _{L} \left( \vec{e} _{1} \right) = \left( \frac{\vec{e} _{1}\cdot \vec{w}}{\vec{w} \cdot \vec{w}} \right) \vec{w} = \frac{w _{1}}{w _{1}^{2} + w _{2}^{2}} \begin{bmatrix} w _1 \\ w _2 \end{bmatrix}$

$\text{proj} _{L} \left( \vec{e} _{2} \right) = \left( \frac{\vec{e} _{2}\cdot \vec{w}}{\vec{w} \cdot \vec{w}} \right) \vec{w} = \frac{w _{2}}{w _{1}^{2} + w _{2}^{2}} \begin{bmatrix} w _1 \\ w _2 \end{bmatrix}$

Matrix: $\frac{1}{w_1^{2} + w_2^{2}} \begin{bmatrix} w_1^{2} & w_1w_2 \\ w_1w_2 & w_2 ^{2} \end{bmatrix}$

Comment: if $L=\text{span} \{ \begin{bmatrix} u_1 \\ u_2 \end{bmatrix} \}$ where $\begin{bmatrix} u_{1} \\ u_2 \end{bmatrix}$ is a unit vector, i.e. $u_1^{2} + u_2^{2} = 1$, then the matrix simplifies to $\begin{bmatrix} u_1^{2} & u_1u_2 \\ u_1u_2 & u_2^{2} \end{bmatrix}$.

Let’s verify that this matrix really gives the projection. Let $\vec{x} = \begin{bmatrix} x_1 \\ x_2 \end{bmatrix}$ and $A = \frac{1}{w_1^{2} + w_2^{2}} \begin{bmatrix} w_1^{2} & w_1w_2 \\ w_1w_2 & w_2 ^{2} \end{bmatrix}$. Show $\text{proj}_{L} \vec{x} = A \vec{x}$.

$\frac{1}{w_1^{2} + w_2^{2}} \begin{bmatrix} w_1^{2} & w_1w_2 \\ w_1w_2 & w_2 ^{2} \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} = \frac{1}{w_1 ^{2} + w_2 ^{2}} \begin{bmatrix} w_1^{2}x_1 + w_1w_2x_2 \\ w_1w_2x_1 + w_2^{2}x_2 \end{bmatrix}$

$= \frac{1}{w_1^{2} + w_2^{2}} \begin{bmatrix} w_1 \left( w_1x_1 + w_2x_2 \right) \\ w_2 \left( w_1x_1 + w_2x_2 \right) \end{bmatrix} = \frac{\vec{w} \cdot \vec{x}}{\vec{w} \cdot \vec{w}} \begin{bmatrix} w_1 \\ w_2 \end{bmatrix} = \text{proj}_{L} \vec{x}$

Example

Find the matrix of orthogonal projection onto the line spanned by $\vec{w} = \begin{bmatrix} -1 \\ 2 \end{bmatrix}$.

$\frac{1}{\left( -1 \right) ^{2} + 2^{2}} \begin{bmatrix} \left( -1 \right) ^{2} & -2 \\ -2 & 2^{2} \end{bmatrix} = \frac{1}{5} \begin{bmatrix} 1 & -2 \\ -2 & 4 \end{bmatrix} = \begin{bmatrix} \frac{1}{5} & -\frac{2}{5} \\ -\frac{2}{5} & \frac{4}{5} \end{bmatrix}$

Example

Find the matrix of orthogonal projection onto the line $y=x$.

$\text{span}\{ \begin{bmatrix} 1 \\ 1 \end{bmatrix} \}$

$\frac{1}{1^{2} + 1^{2}} \begin{bmatrix} 1^{2} & 1\cdot 1 \\ 1\cdot 1 & 1^{2} \end{bmatrix} = \frac{1}{2} \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix} = \begin{bmatrix} \frac{1}{2} & \frac{1}{2} \\ \frac{1}{2} & \frac{1}{2} \end{bmatrix}$

Reflection: Let $L = \text{span} \{ \vec{w} \}$ be a line in $\mathbb{R} ^2$.

We use $\vec{x}^{\bot} = \vec{x} - \text{proj}_L (\vec{x})$

$\text{ref} _{L}\left( \vec{x} \right) = \text{proj} _{L}\left( \vec{x} \right) - \vec{x}^{\bot}$

$= \text{proj} _{L} \left( \vec{x} \right) - \left( \vec{x} - \text{proj} _{L}\left( \vec{x} \right) \right) $

$= 2 \text{proj}_{L} \left( \vec{x} \right) - \vec{x}$

The matrix of reflection about line $L$:

Two ways to compute:

1) Suppose $L = \text{span}\{ \begin{bmatrix} u_1 \\ u_2 \end{bmatrix} \}$, where $u_1 ^{2} + u_2 ^{2} = 1$

$\text{ref} _{L}\left( \vec{x} \right) = 2 \text{proj} _{L} \left( \vec{x} \right) - \vec{x}$, so the matrix is $2 \begin{bmatrix} u _1^{2} & u _1u _2 \\ u _1u _2 & u _2^{2} \end{bmatrix} - I _2 = \begin{bmatrix} 2u _1^{2}-1 & 2u _1u _2 \\ 2u _1u _2 & 2u _2^{2} - 1 \end{bmatrix}$

2) The matrix has the form $\begin{bmatrix} a & b \\ b & -a \end{bmatrix}$ where $a^{2} + b^{2} = 1$ and $\begin{bmatrix} a \\ b \end{bmatrix} = \text{ref}_{L}\left( \vec{e}_1 \right) $

Example

Verify that the matrix $\begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}$ yields reflection about the line $y=x$.

$2 \text{proj}_{L}\left( \vec{x} \right) - \vec{x}$

$2 \begin{bmatrix} \frac{1}{2} & \frac{1}{2} \\ \frac{1}{2} & \frac{1}{2} \end{bmatrix} - \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} 1-1 & 1 \\ 1 & 1-1 \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}$

Example

Let $L$ be the $y$-axis, i.e. $L = \text{span}\{ \begin{bmatrix} 0 \\ 1 \end{bmatrix} \}$.

Find $\text{ref}_{L}\left( \vec{e}_1 \right)$ and the matrix of reflection about the line $L$.

$\text{ref}_{L} \left( \vec{e}_1 \right) = \begin{bmatrix} a \\ b \end{bmatrix}$

Matrix: $\begin{bmatrix} a & b \\ b & -a \end{bmatrix}$

$\text{ref} _{L}\left( \vec{e} _{1} \right) = 2 \text{proj} _{L} \left( \vec{e} _1 \right) - \vec{e} _1$

$= 2 \left( \frac{\vec{e}_1 \cdot \vec{e}_2}{\vec{e}_2 \cdot \vec{e}_2} \right) \vec{e}_2 - \vec{e}_1 = 2 \left( \frac{0}{1} \right) \vec{e}_2 - \vec{e}_1 = \begin{bmatrix} -1 \\ 0 \end{bmatrix}$

$A = \begin{bmatrix} -1 & 0 \\ 0 & 1 \end{bmatrix}$
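The projection and reflection matrices from this section can be generated from any nonzero spanning vector $\vec{w}$. The sketch below uses exact fractions and illustrative function names (`projection_matrix`, `reflection_matrix`); it reproduces the three worked examples above.

```python
from fractions import Fraction


def projection_matrix(w1, w2):
    """Matrix of orthogonal projection onto span{(w1, w2)}, with (w1, w2) nonzero."""
    d = w1 * w1 + w2 * w2
    return [[Fraction(w1 * w1, d), Fraction(w1 * w2, d)],
            [Fraction(w1 * w2, d), Fraction(w2 * w2, d)]]


def reflection_matrix(w1, w2):
    """Matrix of reflection about span{(w1, w2)}, computed as 2P - I."""
    P = projection_matrix(w1, w2)
    I = [[1, 0], [0, 1]]
    return [[2 * P[i][j] - I[i][j] for j in range(2)] for i in range(2)]


# Projection onto the line spanned by (-1, 2).
print(projection_matrix(-1, 2) == [[Fraction(1, 5), Fraction(-2, 5)],
                                   [Fraction(-2, 5), Fraction(4, 5)]])  # True
# Reflection about y = x and about the y-axis.
print(reflection_matrix(1, 1) == [[0, 1], [1, 0]])    # True
print(reflection_matrix(0, 1) == [[-1, 0], [0, 1]])   # True
```

Every reflection matrix produced this way has the form $\begin{bmatrix} a & b \\ b & -a \end{bmatrix}$ with $a^{2} + b^{2} = 1$, matching the second description above.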

Scaling

For $k > 0,\ T(\vec{x}) = k \vec{x}$.

\[\begin{bmatrix} k & 0 \\ 0 & k \end{bmatrix}\]

- $k > 1$ : Dilation

- $0 < k < 1$ : Contraction

Question: Can we interpret the transformation $T(\vec{x}) = \begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix} \vec{x}$ geometrically?

Answer: Rotation counterclockwise by $\frac{\pi}{2}$ or 90 degrees.

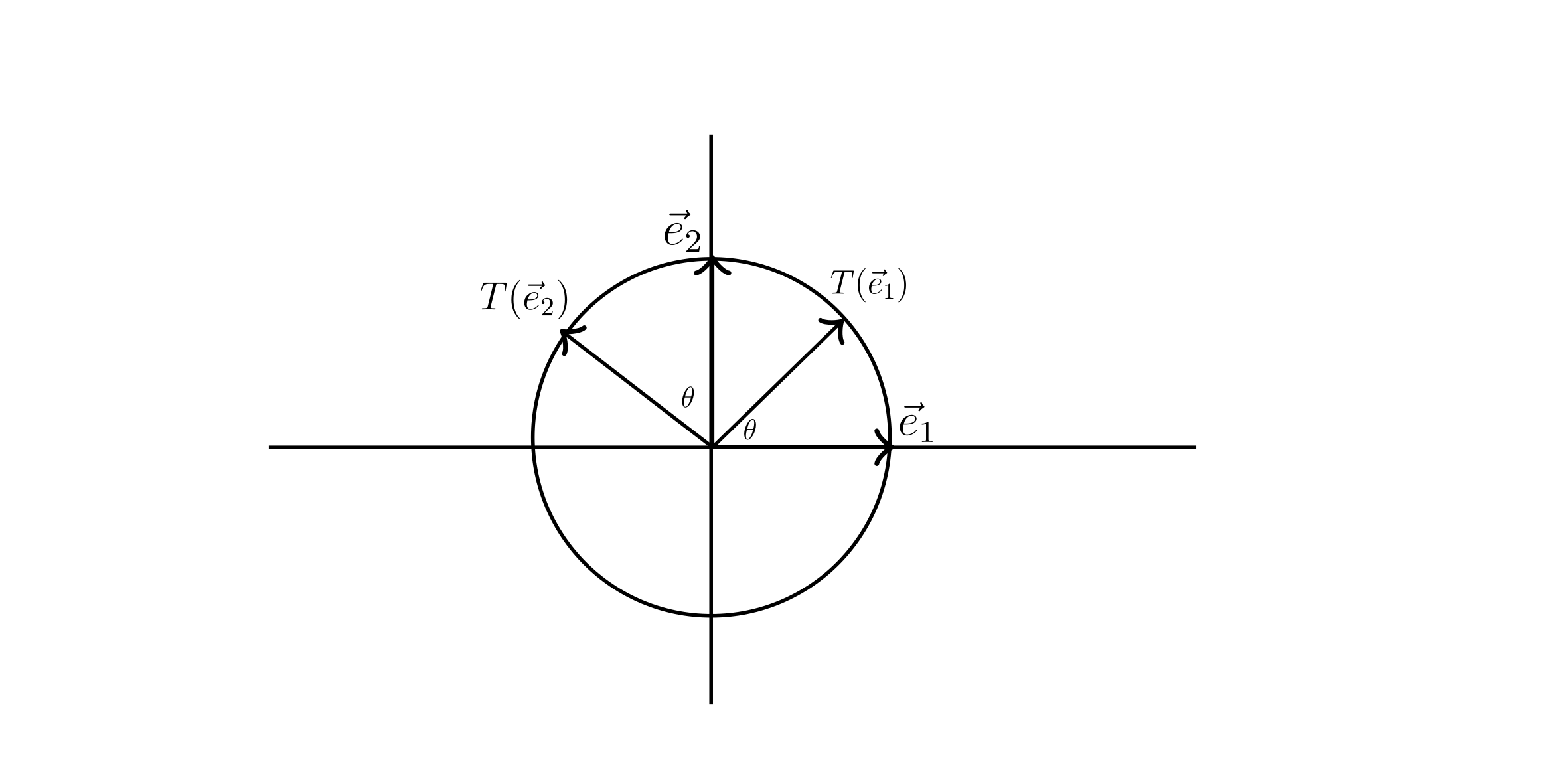

Rotation

Counterclockwise by angle $\theta$.

$T\left( \vec{e}_1 \right) = \begin{bmatrix} \cos \theta \\ \sin \theta \end{bmatrix}$

$T\left( \vec{e}_2 \right) = \begin{bmatrix} \cos \left( \theta + \frac{\pi}{2} \right) \\ \sin \left( \theta + \frac{\pi}{2} \right) \end{bmatrix} = \begin{bmatrix} -\sin \theta \\ \cos \theta \end{bmatrix} $

$\therefore A = \begin{bmatrix} \cos \theta & - \sin \theta \\ \sin \theta & \cos \theta \end{bmatrix}$
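As a quick numerical check of the rotation matrix, the sketch below builds $A$ for a given $\theta$ and confirms that $\theta = \frac{\pi}{2}$ sends $\vec{e}_1$ to $\vec{e}_2$ and $\vec{e}_2$ to $-\vec{e}_1$, matching the matrix $\begin{bmatrix} 0 & -1 \\ 1 & 0 \end{bmatrix}$ from the question above. The helper names are illustrative, and floating-point results are compared with a tolerance.

```python
import math


def rotation_matrix(theta):
    """Matrix of counterclockwise rotation by theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def apply(A, x):
    """Compute the matrix-vector product A x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def close(v, w):
    """Compare two vectors entry by entry, allowing floating-point error."""
    return all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(v, w))


R = rotation_matrix(math.pi / 2)
print(close(apply(R, [1, 0]), [0, 1]))    # True: e1 goes to e2
print(close(apply(R, [0, 1]), [-1, 0]))   # True: e2 goes to -e1
```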

Transformation Recap:

| Transformation | Matrix |
|---|---|
| Scaling (by $k$) | $kI_2 = \begin{bmatrix} k & 0 \\ 0 & k \end{bmatrix} $ |
| Orthogonal projection onto line $L$ | $\begin{bmatrix} u_1^2 & u_1u_2 \\ u_1u_2 & u_2^2 \end{bmatrix} $, where $\begin{bmatrix} u_1 \\ u_2 \end{bmatrix}$ is a unit vector parallel to $L$ |
| Reflection about a line | $\begin{bmatrix} a & b \\ b & -a \end{bmatrix}$, where $a^2 + b^2 = 1$ |
| Rotation through angle $\theta$ | $\begin{bmatrix} \cos \theta & - \sin \theta \\ \sin \theta & \cos \theta \end{bmatrix}$ or $\begin{bmatrix} a & -b \\ b & a \end{bmatrix} $, where $a^2 + b^2 = 1$ |
| Rotation through angle $\theta$ combined with scaling by $r$ | $\begin{bmatrix} a & -b \\ b & a \end{bmatrix} = r \begin{bmatrix} \cos \theta & - \sin \theta \\ \sin \theta & \cos \theta \end{bmatrix}$ |
| Horizontal shear | $\begin{bmatrix} 1 & k \\ 0 & 1 \end{bmatrix} $ |
| Vertical shear | $\begin{bmatrix} 1 & 0 \\ k & 1 \end{bmatrix} $ |

2.3 Matrix Products

Rotation combined with scaling. Suppose

- $T_1 : \mathbb{R}^2 \to \mathbb{R}^2$ gives rotation counterclockwise by angle $\theta$

- $T_2 : \mathbb{R}^2 \to \mathbb{R}^2$ scales by $k > 0$

The combined transformation has the form $T_2 (T_1(\vec{x}))$.

\[T_2 T_1 : \mathbb{R}^2 \to \mathbb{R}^2 \text{ function composition}\]

\[(T_2 T_1)(\vec{x}) = k \begin{bmatrix} \cos \theta & - \sin \theta \\ \sin \theta & \cos \theta \end{bmatrix} \vec{x}\]

What is the matrix?

\[\begin{bmatrix} k\cos \theta & -k \sin \theta \\ k \sin \theta & k \cos \theta \end{bmatrix} = \begin{bmatrix} k & 0 \\ 0 & k \end{bmatrix} \begin{bmatrix} \cos \theta & -\sin \theta \\ \sin \theta & \cos \theta \end{bmatrix}\]

\[\text{Composition of Transformations} \leftrightarrow \text{Matrix Product}\]

The matrix product $BA$: Suppose $B$ is an $n\times p$ matrix and $A$ is a $p \times m$ matrix.

Size of $BA$: $[n \times p] [p\times m] \to n\times m$

Columns of the product $BA$: Suppose $A = \begin{bmatrix} | & | & & | \\ \vec{v}_1 & \vec{v}_2 & \cdots & \vec{v}_m \\ | & | & & | \end{bmatrix}$

\[BA = \begin{bmatrix} | & | & & | \\ B\vec{v}_1 & B\vec{v}_2 & \cdots & B\vec{v}_m \\ | & | & & | \\ \end{bmatrix}\]

Entries of $BA$ are dot products.

$(i, j)$-entry of $BA$ = [row $i$ of $B$] $\cdot$ [column $j$ of $A$]

Example

\[\begin{bmatrix} 1 & 3 & -1 \\ 2 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 & 3 \\ 2 & 0 \\ 0 & -1 \end{bmatrix} = \begin{bmatrix} 7 & 4 \\ 2 & 5 \end{bmatrix}\]

Rows of the product $BA$: [$i$th row of $BA$] = [$i$th row of $B$] $A$

Example

\[\begin{bmatrix} 2 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 & 3 \\ 2 & 0 \\ 0 & -1 \end{bmatrix} = \begin{bmatrix} 2 & 5 \end{bmatrix}\]

Example

Suppose $A = \begin{bmatrix} 5 & 3 & 2 & 0 \end{bmatrix}$ and $B = \begin{bmatrix} 1 \\ -1 \\ 2 \\ -3 \end{bmatrix}$. Find $AB$ and $BA$.

\[AB = \begin{bmatrix} 5 - 3 + 4 + 0 \end{bmatrix} = \begin{bmatrix} 6 \end{bmatrix}\]

\[BA = \begin{bmatrix} 1 \\ -1 \\ 2 \\ -3 \end{bmatrix} \begin{bmatrix} 5 & 3 & 2 & 0 \end{bmatrix} = \begin{bmatrix} 5 & 3 & 2 & 0 \\ -5 & -3 & -2 & 0 \\ 10 & 6 & 4 & 0 \\ -15 & -9 & -6 & 0 \end{bmatrix}\]

Notice from these examples that $AB \neq BA$ (they are not even the same size).
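The entry rule "row $i$ of $B$ dotted with column $j$ of $A$" can be written as a short function. This is an illustrative sketch (the name `matmul` is a hypothetical helper, with matrices stored as lists of rows); it reproduces the products computed in the examples above.

```python
def matmul(B, A):
    """Return the product BA, where B is n x p and A is p x m (lists of rows)."""
    p = len(A)
    assert all(len(row) == p for row in B), "inner dimensions must match"
    m = len(A[0])
    # (i, j) entry = (row i of B) . (column j of A)
    return [[sum(B[i][k] * A[k][j] for k in range(p)) for j in range(m)]
            for i in range(len(B))]


print(matmul([[1, 3, -1], [2, 0, 1]], [[1, 3], [2, 0], [0, -1]]))
# [[7, 4], [2, 5]]

row = [[5, 3, 2, 0]]            # 1 x 4
col = [[1], [-1], [2], [-3]]    # 4 x 1
print(matmul(row, col))         # [[6]]
print(matmul(col, row))
# [[5, 3, 2, 0], [-5, -3, -2, 0], [10, 6, 4, 0], [-15, -9, -6, 0]]
```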

Example

Let $A = \begin{bmatrix} 2 & 1 \\ -3 & 0 \end{bmatrix}$, $B = \begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix}$, and $C = \begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix}$. Show that $A(B+C) = AB + AC$

\[A(B+C) = \begin{bmatrix} 2 & 1 \\ -3 & 0 \end{bmatrix} \left( \begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix} + \begin{bmatrix} 0 & 1 \\ -1 & 0 \end{bmatrix} \right) = \begin{bmatrix} 2 & 1 \\ -3 & 0 \end{bmatrix} \begin{bmatrix} 1 & 1 \\ 0 & 0 \end{bmatrix} = \begin{bmatrix} 2 & 2 \\ -3 & -3 \end{bmatrix}\]

\[AB + AC = \begin{bmatrix} 3 & 0 \\ -3 & 0 \end{bmatrix} + \begin{bmatrix} -1 & 2 \\ 0 & -3 \end{bmatrix} = \begin{bmatrix} 2 & 2 \\ -3 & -3 \end{bmatrix}\]

Properties

- $A(B+C) = AB + AC$ and $(C+D)A = CA + DA$

- $I_nA = AI_m = A$

- $k(AB) = (kA)B = A(kB)$ for any scalar $k$

- $A(BC) = (AB)C$

Be Careful!

- $AB \neq BA$ generally even if they are the same size

- If $AB = AC$, it does not generally follow that $B=C$

- If $AB=0$, it does not generally follow that $A=0$ or $B=0$

Example

\[\begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix} \begin{bmatrix} 4 & 1 \\ 1 & 1 \end{bmatrix} = \begin{bmatrix} 4 & 1 \\ 4 & 1 \end{bmatrix}\]

and

\[\begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix} \begin{bmatrix} 4 & 1 \\ -1 & 2 \end{bmatrix} = \begin{bmatrix} 4 & 1 \\ 4 & 1 \end{bmatrix}\]

Here $AB = AC$ even though $B \neq C$.

Example

\[\begin{bmatrix} 4 & 1 \\ 1 & 1 \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix} = \begin{bmatrix} 5 & 0 \\ 2 & 0 \end{bmatrix}\]

Comparing with the first product in the previous example, $AB \neq BA$ for these two matrices.

Example

\[\begin{bmatrix} 2 & 0 \\ 0 & 0 \\ -4 & 0 \end{bmatrix} \begin{bmatrix} 0 & 0 \\ 1 & 6 \end{bmatrix} = \begin{bmatrix} 0 & 0 \\ 0 & 0 \\ 0 & 0 \end{bmatrix}\]

The product is the zero matrix although neither factor is zero.

Definition: For matrices $A$ and $B$, we say $A$ and $B$ commute provided $AB = BA$. Note that both $A$ and $B$ must be $n \times n$.

- We see $\begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix}$ and $\begin{bmatrix} 4 & 1 \\ 1 & 1 \end{bmatrix}$ do not commute.

- $I_n$ commutes with any $n \times n$ matrix

Example

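Find all $2 \times 2$ matrices that commute with $\begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix}$.

\[\begin{bmatrix} a & b \\ c & d \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix} = \begin{bmatrix} a + b & 0 \\ c+d & 0 \end{bmatrix}\]

\[\begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix} \begin{bmatrix} a & b \\ c & d \end{bmatrix} = \begin{bmatrix} a & b \\ a & b \end{bmatrix}\]

Setting the two products equal entry by entry:

$a+b = a$

$c+d = a$

$b = 0$

So $b = 0$ and $a = c + d$. The matrices that commute with $\begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix}$ are exactly those of the form

\[\begin{bmatrix} c+d & 0 \\ c & d \end{bmatrix}\]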
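The answer to the example above (matrices of the form $\begin{bmatrix} c+d & 0 \\ c & d \end{bmatrix}$ commute with $\begin{bmatrix} 1 & 0 \\ 1 & 0 \end{bmatrix}$) can be spot-checked by multiplying in both orders. This sketch uses hypothetical helpers `matmul`, `commutes`, and `family`, and only tests a small grid of integer values of $c$ and $d$; it is a sanity check, not a proof.

```python
def matmul(A, B):
    """Product AB of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def commutes(A, B):
    """True when AB = BA."""
    return matmul(A, B) == matmul(B, A)


M = [[1, 0], [1, 0]]


def family(c, d):
    """A matrix of the form found in the example: [[c + d, 0], [c, d]]."""
    return [[c + d, 0], [c, d]]


print(all(commutes(family(c, d), M)
          for c in range(-3, 4) for d in range(-3, 4)))   # True
print(commutes([[4, 1], [1, 1]], M))                      # False
```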

Example

Find all matrices that commute with $\begin{bmatrix} 2 & 0 & 0 \\ 0 & 3 & 0 \\ 0 & 0 & 4 \end{bmatrix} $

\[\begin{bmatrix} 2 & 0 & 0 \\ 0 & 3 & 0 \\ 0 & 0 & 4 \end{bmatrix} \begin{bmatrix} a & b & c \\ d & e & f \\ g & h & i \end{bmatrix} = \begin{bmatrix} 2a & 2b & 2c \\ 3d & 3e & 3f \\ 4g & 4h & 4i \end{bmatrix}\]

\[\begin{bmatrix} a & b & c \\ d & e & f \\ g & h & i \end{bmatrix} \begin{bmatrix} 2 & 0 & 0 \\ 0 & 3 & 0 \\ 0 & 0 & 4 \end{bmatrix} = \begin{bmatrix} 2a & 3b & 4c \\ 2d & 3e & 4f \\ 2g & 3h & 4i \end{bmatrix}\]

Comparing the off-diagonal entries:

$2b = 3b$

$2c = 4c$

$3d = 2d$

$3f = 4f$

$4g = 2g$

$4h = 3h$

Each equation forces the variable to be $0$, so $b = c = d = f = g = h = 0$. The matrices that commute with the given matrix are exactly the diagonal matrices:

\[\begin{bmatrix} a & 0 & 0 \\ 0 & e & 0 \\ 0 & 0 & i \end{bmatrix}\]

Power of a Matrix

Suppose $A$ is $n \times n$. For an integer $k \ge 1$, define the $k$th power of $A$.

\[A^k = \underbrace{AAAA \cdots A}_{k \text{ times}}\]

Properties:

- $A^pA^q = A^{p+q}$

- $\left( A^{p} \right)^{q} = A^{pq}$

Example

$A = \begin{bmatrix} 0 & 1 & 2 \\ 0 & 0 & -1 \\ 0 & 0 & 0 \end{bmatrix}$. Find $A^{2}$, $A^{3}$. What is $A^{k}$ for $k > 3$?

\[A^2 = \begin{bmatrix} 0 & 1 & 2 \\ 0 & 0 & -1 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} 0 & 1 & 2 \\ 0 & 0 & -1 \\ 0 & 0 & 0 \end{bmatrix} = \begin{bmatrix} 0 & 0 & -1 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}\]

\[A^3 = \begin{bmatrix} 0 & 1 & 2 \\ 0 & 0 & -1 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} 0 & 0 & -1 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} = \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}\]

Note that $A^3 = 0$, but $A \neq 0$. Since $A^{k} = A^{3}A^{k-3}$, we get $A^{k} = 0$ for all $k > 3$.

$\text{rank}\left( A \right) = 2$, $\text{rank}\left( A^{2} \right) = 1$, $\text{rank}\left( A^{3} \right) = 0$
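Repeated multiplication gives a direct way to compute $A^{k}$. The sketch below (with illustrative helper names `matmul` and `power`) recomputes $A^{2}$ and $A^{3}$ for the matrix in this example and spot-checks the property $A^{p}A^{q} = A^{p+q}$ on a second, arbitrarily chosen matrix.

```python
def matmul(A, B):
    """Product AB of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def power(A, k):
    """A^k for a square matrix A and an integer k >= 1, by repeated multiplication."""
    result = A
    for _ in range(k - 1):
        result = matmul(result, A)
    return result


A = [[0, 1, 2], [0, 0, -1], [0, 0, 0]]
print(power(A, 2))   # [[0, 0, -1], [0, 0, 0], [0, 0, 0]]
print(power(A, 3))   # [[0, 0, 0], [0, 0, 0], [0, 0, 0]]

# Spot-check A^p A^q = A^(p+q) on an arbitrary 2 x 2 matrix.
B = [[2, 1], [-3, 0]]
print(matmul(power(B, 2), power(B, 3)) == power(B, 5))   # True
```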

Example

\[\begin{bmatrix} a & 0 & 0 \\ 0 & b & 0 \\ 0 & 0 & c \end{bmatrix} \begin{bmatrix} a & 0 & 0 \\ 0 & b & 0 \\ 0 & 0 & c \end{bmatrix} = \begin{bmatrix} a^2 & 0 & 0 \\ 0 & b^2 & 0 \\ 0 & 0 & c^2 \end{bmatrix}\]

Exam 1

Will most likely have a “find all matrices that commute with” question

100 minutes

Practice Quiz 2

1) Compute the product $A \vec{x}$ using paper and pencil: $\begin{bmatrix} 1 & 3 \\ 1 & 4 \\ -1 & 0 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} 1 \\ -2 \end{bmatrix}$.

\[1 \begin{bmatrix} 1 \\ 1 \\ -1 \\ 0 \end{bmatrix} - 2 \begin{bmatrix} 3 \\ 4 \\ 0 \\ 1 \end{bmatrix} = \begin{bmatrix} -5 \\ -7 \\ -1 \\ -2 \end{bmatrix}\]

2) Let $A$ be a $6 \times 3$ matrix. We are told that $A \vec{x} = \vec{0}$ has a unique solution.

a) What is the reduced row-echelon form of $A$?

b) Can $A\vec{x} = \vec{b}$ be an inconsistent system for some $\vec{b} \in \mathbb{R}^6$? Justify your answer.

c) Can $A\vec{x} = \vec{b}$ have infinitely many solutions for some $\vec{b} \in \mathbb{R}^6$? Justify your answer.

Solution

a)

\[\begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}\]

b) Yes; we can have a row $\begin{bmatrix} 0 & 0 & 0 & \big| & c \end{bmatrix}$ where $c\neq 0$ in $\text{rref}\begin{bmatrix} A & \big| & \vec{b} \end{bmatrix}$.

c) No; there are no free variables

3) Let $\vec{w} = \begin{bmatrix} -2 \\ 2 \\ 0 \\ 1 \end{bmatrix}$, $L = \text{span}\left( \vec{w} \right)$, and $\vec{x} = 3 \vec{e}_2 \in \mathbb{R}^4$. Show your work.

a) Find $\vec{x}^{\parallel} = \text{proj}_L \left( \vec{x} \right)$, the projection of $\vec{x}$ onto $L$.

b) Find $\vec{x}^{\bot}$, the component of $\vec{x}$ orthogonal to $L$.

Solution

a) $\text{proj}_L \left( \vec{x} \right) = \left( \frac{\vec{x} \cdot \vec{w}}{\vec{w} \cdot \vec{w}} \right) \vec{w}$

$\vec{x} \cdot \vec{w} = 0 + 6 + 0 + 0 = 6$

$\vec{w} \cdot \vec{w} = 4 + 4 + 0 + 1 = 9$

$\text{proj}_{L} \left( \vec{x} \right) = \frac{2}{3} \begin{bmatrix} -2 \\ 2 \\ 0 \\ 1 \end{bmatrix} = \begin{bmatrix} -\frac{4}{3} \\ \frac{4}{3} \\ 0 \\ \frac{2}{3} \end{bmatrix}$

b) $\vec{x}^{\bot} = \vec{x} - \text{proj}_L \left( \vec{x} \right)$

$= \begin{bmatrix} 0 \\ 3 \\ 0 \\ 0 \end{bmatrix} - \begin{bmatrix} -\frac{4}{3} \\ \frac{4}{3} \\ 0 \\ \frac{2}{3} \end{bmatrix} = \begin{bmatrix} \frac{4}{3} \\ \frac{5}{3} \\ 0 \\ -\frac{2}{3} \end{bmatrix}$

4) Suppose $T_1 : \mathbb{R}^{2} \to \mathbb{R}^{3}$ is given by $T_1 \left( \begin{bmatrix} x \\ y \end{bmatrix} \right) = \begin{bmatrix} 0 \\ x - y \\ 3y \end{bmatrix}$ and $T_2 : \mathbb{R}^{2} \to \mathbb{R}^{2}$ is a scaling transformation with $T_2 \left( \begin{bmatrix} 1 \\ 7 \end{bmatrix} \right) = \begin{bmatrix} 3 \\ 21 \end{bmatrix}$. Show your work

a) Find the matrix of the transformation $T_1$.

b) Find the matrix of the transformation $T_2$.

Solution

a) $\begin{bmatrix} | & | \\ T_1\left( \vec{e}_1 \right) & T_1\left( \vec{e}_2 \right) \\ | & | \end{bmatrix}$

$T_1 \begin{bmatrix} 1 \\ 0 \end{bmatrix} = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}$, $T_1 \begin{bmatrix} 0 \\ 1 \end{bmatrix} = \begin{bmatrix} 0 \\ -1 \\ 3 \end{bmatrix}$

$A = \begin{bmatrix} 0 & 0 \\ 1 & -1 \\ 0 & 3 \end{bmatrix}$

b) Since $\begin{bmatrix} 3 \\ 21 \end{bmatrix} = 3 \begin{bmatrix} 1 \\ 7 \end{bmatrix}$, $T_2$ is scaling by $k=3$.

$\begin{bmatrix} 3 & 0 \\ 0 & 3 \end{bmatrix}$

5) Let $T : \mathbb{R}^{2} \to \mathbb{R}^{3}$ be a linear transformation such that $T \left( 2 \vec{e}_1 \right) = \begin{bmatrix} 2 \\ 2 \\ 2 \end{bmatrix}$ and $T \left( \vec{e}_1 + \vec{e}_2 \right) = \begin{bmatrix} 2 \\ 3 \\ 4 \end{bmatrix}$. Find $T \left( \vec{e}_1 \right)$ and $T \left( \vec{e}_2 \right)$. Show your work.

$T \left( 2 \vec{e}_1 \right) = 2 T \left( \vec{e}_1 \right) = \begin{bmatrix} 2 \\ 2 \\ 2 \end{bmatrix}$

$T \left( \vec{e}_1 \right) = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}$

$T \left( \vec{e}_1 + \vec{e}_2 \right) = T \left( \vec{e}_1 \right) + T \left( \vec{e}_2 \right) = \begin{bmatrix} 2 \\ 3 \\ 4 \end{bmatrix}$

$T \left( \vec{e}_2 \right) = \begin{bmatrix} 2 \\ 3 \\ 4 \end{bmatrix} - T \left( \vec{e}_1 \right) = \begin{bmatrix} 1 \\ 2 \\3 \end{bmatrix}$

2.4 Inverse of a Linear Transformation

In Math1365 (or other courses), you saw diagrams for a function $f : X \to Y$.

Definition:

We say the function $f : X \to Y$ is invertible provided for each $y$ in $Y$, there is a unique $x$ in $X$ with $f(x) = y$. In that case the inverse $f^{-1} : Y \to X$ is the function defined by $f^{-1}(y) = x$ provided $f(x) = y$.

Same notation for linear transformation $T : \mathbb{R}^{n} \to \mathbb{R}^{n}$

A square $n \times n$ matrix $A$ is invertible provided the map $T \left( \vec{x} \right) = A \vec{x}$ is invertible. The matrix for $T^{-1}$ is denoted $A^{-1}$.

Note:

- $T T^{-1} (\vec{y}) = \vec{y}$ for any $\vec{y}$ in $\mathbb{R}^{n}$

- $T^{-1}T(\vec{x}) = \vec{x}$ for any $\vec{x}$ in $\mathbb{R}^{n}$

- $AA^{-1} = I_{n}$ and $A^{-1}A = I_{n}$

$A$ invertible means $A\vec{x} = \vec{b}$ has a unique solution for every $\vec{b}$ in $\mathbb{R}^{n}$.

- The unique solution is $\vec{x} = A^{-1}\vec{b}$

From our discussion of rank: $A$ is invertible is equivalent to…

- $\text{rank}(A) = n$

- $\text{rref}(A) = I_n$

- The only solution to $A\vec{x} = \vec{0}$ is $\vec{x} = \vec{0}$

How to find $A^{-1}$ if $A$ is $n \times n$,

- Form the $n \times \left( 2n \right)$ matrix $\begin{bmatrix} A & \big| & I \end{bmatrix}$

- Perform elementary row operations to find $\text{rref} \begin{bmatrix} A & \big| & I \end{bmatrix}$

Then,

- If $\text{rref} \begin{bmatrix} A & \big| & I \end{bmatrix} = \begin{bmatrix} I & \big| & B\end{bmatrix}$ then $B = A^{-1}$.

- If $\text{rref} \begin{bmatrix} A & \big| & I \end{bmatrix}$ is not of this form then $A$ is not invertible.

Example

$A = \begin{bmatrix} 2 & 3 \\ 1 & 1 \end{bmatrix}$. Find $A^{-1}$.

\[\begin{bmatrix} 2 & 3 & \big| & 1 & 0 \\ 1 & 1 & \big| & 0 & 1 \end{bmatrix} \to \begin{bmatrix} 1 & 1 & \big| & 0 & 1 \\ 2 & 3 & \big| & 1 & 0 \end{bmatrix} \to \begin{bmatrix} 1 & 1 & \big| & 0 & 1 \\ 0 & 1 & \big| & 1 & -2 \end{bmatrix} \to \begin{bmatrix} 1 & 0 & \big| & -1 & 3 \\ 0 & 1 & \big| & 1 & -2 \end{bmatrix}\]

\[A^{-1} = \begin{bmatrix} -1 & 3 \\ 1 & -2 \end{bmatrix}\]

Example

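$A = \begin{bmatrix} 2 & 2 \\ 1 & 1 \end{bmatrix}$. Find $A^{-1}$.

\[\begin{bmatrix} 2 & 2 & \big| & 1 & 0 \\ 1 & 1 & \big| & 0 & 1 \end{bmatrix} \to \begin{bmatrix} 1 & 1 & \big| & 0 & 1 \\ 2 & 2 & \big| & 1 & 0 \end{bmatrix} \to \begin{bmatrix} 1 & 1 & \big| & 0 & 1 \\ 0 & 0 & \big| & 1 & -2 \end{bmatrix}\]

The left block has a row of zeros, so $\text{rref} \begin{bmatrix} A & \big| & I \end{bmatrix}$ cannot have the form $\begin{bmatrix} I & \big| & B \end{bmatrix}$. $A$ is not invertible.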

Example

$A = \begin{bmatrix} 1 & 3 & 1 \\ 1 & 4 & 1 \\ 2 & 0 & 1 \end{bmatrix}$. Find $A^{-1}$.

\[\begin{bmatrix} 1 & 3 & 1 & \big| & 1 & 0 & 0 \\ 1 & 4 & 1 & \big| & 0 & 1 & 0 \\ 2 & 0 & 1 & \big| & 0 & 0 & 1 \end{bmatrix} \to \begin{bmatrix} 1 & 3 & 1 & \big| & 1 & 0 & 0 \\ 0 & 1 & 0 & \big| & -1 & 1 & 0 \\ 0 & -6 & -1 & \big| & -2 & 0 & 1 \end{bmatrix}\]

\[\to \begin{bmatrix} 1 & 3 & 1 & \big| & 1 & 0 & 0 \\ 0 & 1 & 0 & \big| & -1 & 1 & 0 \\ 0 & 0 & -1 & \big| & -8 & 6 & 1 \end{bmatrix}\]

\[\to \begin{bmatrix} 1 & 3 & 1 & \big| & 1 & 0 & 0 \\ 0 & 1 & 0 & \big| & -1 & 1 & 0 \\ 0 & 0 & 1 & \big| & 8 & -6 & -1 \end{bmatrix} \to \begin{bmatrix} 1 & 3 & 0 & \big| & -7 & 6 & 1\\ 0 & 1 & 0 & \big| & -1 & 1 & 0 \\ 0 & 0 & 1 & \big| & 8 & -6 & -1 \end{bmatrix}\]

\[\to \begin{bmatrix} 1 & 0 & 0 & \big| & -4 & 3 & 1 \\ 0 & 1 & 0 & \big| & -1 & 1 & 0 \\ 0 & 0 & 1 & \big| & 8 & -6 & -1 \end{bmatrix}\]

\[A^{-1} = \begin{bmatrix} -4 & 3 & 1 \\ -1 & 1 & 0 \\ 8 & -6 & -1 \end{bmatrix}\]
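The row-reduction procedure used in the three examples above (reduce $\begin{bmatrix} A & \big| & I \end{bmatrix}$ and read off the right block) can be sketched as follows. This is one possible implementation under stated assumptions: the function name `inverse` is made up for this note, entries are exact fractions, and the order of row operations may differ from the hand computations even though the final answer agrees.

```python
from fractions import Fraction


def inverse(A):
    """Return A^{-1} as a list of rows, or None if the square matrix A is not invertible."""
    n = len(A)
    # Form the n x 2n augmented matrix [A | I].
    M = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        # Look for a nonzero entry in this column, at or below the diagonal.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return None  # no leading 1 in this column, so rref is not [I | B]
        M[col], M[pivot] = M[pivot], M[col]      # swap rows
        lead = M[col][col]
        M[col] = [v / lead for v in M[col]]      # scale to get a leading 1
        for r in range(n):                       # clear the rest of the column
            if r != col:
                factor = M[r][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]                # right block is A^{-1}


print(inverse([[2, 3], [1, 1]]) == [[-1, 3], [1, -2]])            # True
print(inverse([[2, 2], [1, 1]]))                                  # None
print(inverse([[1, 3, 1], [1, 4, 1], [2, 0, 1]])
      == [[-4, 3, 1], [-1, 1, 0], [8, -6, -1]])                   # True
```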

Example

Find all solutions to the system $A\vec{x} = \vec{b}$ where $A = \begin{bmatrix} 1 & 3 & 1 \\ 1 & 4 & 1 \\ 2 & 0 & 1 \end{bmatrix}$ and $\vec{b} = \begin{bmatrix} 1 \\ -1 \\ 0 \end{bmatrix}$

\[A^{-1} = \begin{bmatrix} -4 & 3 & 1 \\ -1 & 1 & 0 \\ 8 & -6 & -1 \end{bmatrix}\]

\[\vec{x} = A^{-1}\vec{b} = \begin{bmatrix} -4 & 3 & 1 \\ -1 & 1 & 0 \\ 8 & -6 & -1 \end{bmatrix} \begin{bmatrix} 1 \\ -1 \\ 0 \end{bmatrix} = \begin{bmatrix} -7 \\ -2 \\ 14 \end{bmatrix}\]

Theorem:

Let $A$, $B$ be $n \times n$ matrices with $BA = I_n$. Then,

- (1) $A$, $B$ are both invertible

- (2) $A^{-1} = B$ and $B^{-1} = A$

- (3) $AB = I_n$

Proof: Assume $A$, $B$ are $n\times n$ matrices with $BA = I_n$. Suppose $A\vec{x} = \vec{0}$; we show $\vec{x}=\vec{0}$. Multiplying by $B$ gives $BA\vec{x} = B\vec{0}$, that is, $I_n\vec{x} = \vec{0}$, so $\vec{x} = \vec{0}$. Thus, $A$ is invertible. Then $BA A^{-1} = I_nA^{-1}$, so $B = A^{-1}$. Hence $B$ is invertible with $B^{-1} = A$, and $AB = AA^{-1} = I_n$.

Using the theorem:

If $A$, $B$ are $n\times n$ invertible matrices then so is $BA$ and $\left( BA \right) ^{-1} = A^{-1}B^{-1}$.

Proof: $\left( BA \right) \left( A^{-1}B^{-1} \right) = B\left( A A^{-1} \right) B^{-1} = BIB^{-1} = B B^{-1} = I$.

Exercise: Suppose $A$ is an $n\times n$ invertible matrix.

Is $A^{2}$ invertible? If so, what is $\left( A^{2} \right) ^{-1}$?

Yes; $\left( A^{2} \right)^{-1} = A^{-1}A^{-1} = \left( A^{-1} \right) ^{2}$

Is $A^{3}$ invertible? If so, what is $\left( A^{3} \right)^{-1}$?

Yes; $\left( A^{3} \right)^{-1} = \left( A^{-1} \right) ^{3}$

$\left( A AA \right) \left( A^{-1}A^{-1}A^{-1} \right) = A A \left( A A^{-1} \right) A^{-1}A^{-1} = A \left( A A^{-1} \right) A^{-1} = A A^{-1} = I$

Back to $2\times 2$ matrices: We saw

- For $A = \begin{bmatrix} 2 & 3 \\ 1 & 1 \end{bmatrix}$, $A^{-1} = \begin{bmatrix} -1 & 3 \\ 1 & -2 \end{bmatrix}$.

- The matrix $\begin{bmatrix} 2 & 2 \\ 1 & 1 \end{bmatrix}$ is not invertible

Theorem: Consider a $2\times 2$ matrix $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$.

$A$ is invertible if and only if $ad - bc \neq 0$

If $A$ is invertible, then $A^{-1}$ = $\frac{1}{ad-bc} \begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$

The number $ad - bc$ is the determinant of $A = \begin{bmatrix} a & b \\ c& d \end{bmatrix}$.

Example

$A = \begin{bmatrix} 4 & 7 \\ 0 & 1 \end{bmatrix}$. Find $\text{det}(A)$ and $A^{-1}$.

$\text{det}(A) = 4(1) - 7(0) = 4$

$A^{-1} = \frac{1}{4} \begin{bmatrix} 1 & -7 \\ 0 & 4 \end{bmatrix}$
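The $2 \times 2$ formula is short enough to code directly. The sketch below (the function name `inverse_2x2` is illustrative) returns `None` when $ad - bc = 0$ and otherwise applies $\frac{1}{ad-bc} \begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$ with exact fractions, assuming integer entries.

```python
from fractions import Fraction


def inverse_2x2(a, b, c, d):
    """Inverse of [[a, b], [c, d]] for integer entries, or None when ad - bc = 0."""
    det = a * d - b * c
    if det == 0:
        return None
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]


print(inverse_2x2(4, 7, 0, 1) == [[Fraction(1, 4), Fraction(-7, 4)], [0, 1]])  # True
print(inverse_2x2(2, 3, 1, 1) == [[-1, 3], [1, -2]])                           # True
print(inverse_2x2(2, 2, 1, 1))                                                 # None
```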

3.1 Image and Kernel of a Linear Transformation

Definition:

Let $T : \mathbb{R}^{m} \to \mathbb{R}^{n}$ be a linear transformation.

The image of $T$, denoted $\text{im}\left( T \right)$ : $\text{im}\left( T \right) = \{T \left( \vec{x} \right) : \vec{x} \in \mathbb{R}^{m} \} \subseteq \mathbb{R}^{n}$

The kernel of $T$, denoted $\text{ker}\left( T \right)$ : $\text{ker}\left( T \right) = \{ \vec{x} \in \mathbb{R}^{m} : T \left( \vec{x} \right) = \vec{0} \} \subseteq \mathbb{R}^{m}$

Example

What is $\text{ker} \left( T \right)$ and $\text{im}\left( T \right)$ when $T : \mathbb{R}^{2} \to \mathbb{R}^{2}$ is

1) Projection onto the line $y = -x$.

2) Reflection about the line $y = -x$.

Solution

1)

$\vec{w} = \begin{bmatrix} -1 \\ 1 \end{bmatrix}$

$L = \text{span}\left( \begin{bmatrix} -1 \\ 1 \end{bmatrix} \right) $

$\text{proj}_{L} \left( \vec{x} \right) = \left( \frac{\vec{x} \cdot \vec{w}}{\vec{w} \cdot \vec{w}} \right) \vec{w}$

$\vec{x}$ is in $\text{ker}\left( T \right)$ provided $\vec{x} \cdot \begin{bmatrix} -1 \\ 1 \end{bmatrix} = 0$

$\text{ker}\left( T \right) = \{ \begin{bmatrix} x_1 \\ x_2 \end{bmatrix} : -x_1 + x_2 = 0 \}$, which is the line $y = x$ (the line through the origin perpendicular to $L$)

$\text{im}\left( T \right) = L$

2) $\text{ker}\left( T \right) = \{ \vec{0} \}$

$\text{im}\left( T \right) = \mathbb{R}^{2}$

Suppose $T : \mathbb{R}^{m} \to \mathbb{R}^{n}$ is a linear transformation. There is an $n \times m$ matrix $A = \begin{bmatrix} | & | & & | \\ \vec{a}_1 & \vec{a}_2 & \cdots & \vec{a}_m \\ | & | & & | \end{bmatrix}$ such that $T \left( \vec{x} \right) = A \vec{x}$ for all $\vec{x}$ in $\mathbb{R}^{m}$.

Image of $T$ (Also written $\text{im}\left( A \right)$):

$\text{im}\left( T \right) = \{ A\vec{x} : \vec{x} \in \mathbb{R}^{m} \} = \{ x_1\vec{a}_1 + x_2\vec{a}_2 + \cdots + x_m\vec{a}_m : x_i \in \mathbb{R} \} = \{ \text{all linear combinations of } \vec{a}_1,\ \vec{a}_2,\ \cdots ,\ \vec{a}_m \} = \text{span}\left( \vec{a}_1,\ \vec{a}_2,\ \cdots, \vec{a}_m \right)$

Kernel of $T$ (Also written $\text{ker}\left( A \right)$):

$\text{ker}\left( T \right) = \{ \vec{x} \in \mathbb{R}^{m} : A\vec{x} = \vec{0} \} = \{ \text{all solutions to } A\vec{x} = \vec{0} \}$

Example

Find vectors that span the kernel of $\begin{bmatrix} 1 & -3 \\ -3 & 9 \end{bmatrix}$.

\[\begin{bmatrix} 1 & -3 & \big| & 0 \\ -3 & 9 & \big| & 0 \end{bmatrix} \to \begin{bmatrix} 1 & -3 & \big| & 0 \\ 0 & 0 & \big| & 0 \end{bmatrix}\]

$x_2 = t$

$x_1 - 3t = 0$

$x_1 = 3t$

$\begin{bmatrix} 3t \\ t \end{bmatrix} = t \begin{bmatrix} 3 \\ 1 \end{bmatrix}$

$\text{ker}\left( A \right) = \text{span} \{ \begin{bmatrix} 3 \\ 1 \end{bmatrix} \}$

Example

Find vectors that span the kernel of $\begin{bmatrix} 1 & 3 & 0 & 5 \\ 2 & 6 & 1 & 16 \\ 5 & 15 & 0 & 25 \end{bmatrix}$.

\[\begin{bmatrix} 1 & 3 & 0 & 5 \\ 2 & 6 & 1 & 16 \\ 5 & 15 & 0 & 25 \end{bmatrix} \to \begin{bmatrix} 1 & 3 & 0 & 5 \\ 0 & 0 & 1 & 6 \\ 0 & 0 & 0 & 0 \end{bmatrix}\]

$x_2 = t$

$x_4 = r$

$x_1 = -3t - 5r$

$x_3 = -6r$

\[\begin{bmatrix} -3t - 5r \\ t \\ -6r \\ r \end{bmatrix} = t \begin{bmatrix} -3 \\ 1 \\ 0 \\ 0 \end{bmatrix} + r \begin{bmatrix} -5 \\ 0 \\ -6 \\ 1 \end{bmatrix}\]

\[\text{ker}(A) = \text{span} \{ \begin{bmatrix} -3 \\ 1 \\ 0 \\ 0 \end{bmatrix}, \begin{bmatrix} -5 \\ 0 \\ -6\\ 1 \end{bmatrix} \}\]
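The recipe used in these kernel examples (row reduce, set each free variable to a parameter, solve for the leading variables) can be sketched in code. The helpers `rref` and `kernel_basis` below are illustrative names, not part of the course material; each returned vector corresponds to setting one free variable to $1$ and the others to $0$.

```python
from fractions import Fraction


def rref(A):
    """Return (R, pivots): the reduced row-echelon form of A and its pivot column indices."""
    M = [[Fraction(v) for v in row] for row in A]
    rows, cols = len(M), len(M[0])
    pivots = []
    r = 0  # index of the row that will hold the next leading 1
    for c in range(cols):
        if r == rows:
            break
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue  # no leading 1 in this column: a free variable
        M[r], M[pivot] = M[pivot], M[r]
        lead = M[r][c]
        M[r] = [v / lead for v in M[r]]
        for i in range(rows):
            if i != r:
                factor = M[i][c]
                M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        pivots.append(c)
        r += 1
    return M, pivots


def kernel_basis(A):
    """Vectors spanning ker(A), one for each free variable."""
    R, pivots = rref(A)
    cols = len(A[0])
    basis = []
    for free in (c for c in range(cols) if c not in pivots):
        v = [Fraction(0)] * cols
        v[free] = Fraction(1)
        # Each pivot row reads: x_pivot + (coefficient) * x_free + ... = 0.
        for row, p in zip(R, pivots):
            v[p] = -row[free]
        basis.append(v)
    return basis


print(kernel_basis([[1, -3], [-3, 9]]) == [[3, 1]])                    # True
print(kernel_basis([[1, 3, 0, 5], [2, 6, 1, 16], [5, 15, 0, 25]])
      == [[-3, 1, 0, 0], [-5, 0, -6, 1]])                              # True
```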

Example

Find vectors that span the kernel of $\begin{bmatrix} 1 & 1 & -2 \\ -1 & -1 & 2 \end{bmatrix}$

\[\begin{bmatrix} 1 & 1 & -2 \\ -1 & -1 & 2 \end{bmatrix} \to \begin{bmatrix} 1 & 1 & -2 \\ 0 & 0 & 0 \end{bmatrix}\]

$x_1 = -r + 2s$

$x_2 = r$

$x_3 = s$

\[\begin{bmatrix} -r + 2s \\ r \\ s \end{bmatrix} = r \begin{bmatrix} -1 \\ 1 \\ 0 \end{bmatrix} + s \begin{bmatrix} 2 \\ 0 \\ 1 \end{bmatrix}\]

\[\text{ker}(A) = \text{span} \{ \begin{bmatrix} -1 \\ 1 \\ 0 \end{bmatrix}, \begin{bmatrix} 2 \\ 0 \\1 \end{bmatrix} \}\]

Properties of the kernel:

- $\vec{0} \in \text{ker}\left( A \right)$

- If $\vec{v}_1$, $\vec{v}_2 \in \text{ker}\left( A \right)$, then $\vec{v}_1 + \vec{v}_2 \in \text{ker}\left( A \right)$. Closed under addition.

- If $\vec{v} \in \text{ker}\left( A \right)$, then $k\vec{v} \in \text{ker}\left( A \right)$ for every scalar $k$. Closed under scalar multiplication.

Proof:

- $A\vec{0} = \vec{0}$

- If $A\vec{v}_1 = \vec{0}$ and $A\vec{v}_2 = \vec{0}$, then $A \left( \vec{v}_1 + \vec{v}_2\right) = A\vec{v}_1 + A \vec{v}_2 = \vec{0} + \vec{0} = \vec{0}$

- If $A\vec{v} = \vec{0}$, then $A\left( k\vec{v} \right) = kA\vec{v} = k\vec{0} = \vec{0}$.
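As a quick numerical sanity check of the three properties (not a substitute for the proof), the sketch below reuses the matrix and kernel vectors from the last example. The helper `mat_vec` and the scalar `k = 7` are arbitrary choices made for illustration.

```python
def mat_vec(A, v):
    """Multiply matrix A (a list of rows) by the vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

A = [[1, 1, -2],
     [-1, -1, 2]]
v1 = [-1, 1, 0]   # kernel vectors found in the example above
v2 = [2, 0, 1]
k = 7             # arbitrary scalar, chosen only for illustration

total = [a + b for a, b in zip(v1, v2)]   # v1 + v2
scaled = [k * a for a in v1]              # k * v1

print(mat_vec(A, [0, 0, 0]))  # zero vector is in the kernel
print(mat_vec(A, total))      # sum stays in the kernel
print(mat_vec(A, scaled))     # scalar multiple stays in the kernel
```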

When describing $\text{im}\left( A \right)$ or $\text{ker}\left( A \right)$ as a span, give as few vectors as possible!

Example

$A = \begin{bmatrix} 1 & -3 \\ -3 & 9 \end{bmatrix}$

$\text{rref}(A) = \begin{bmatrix} 1 & -3 \\ 0 & 0 \end{bmatrix}$

$x \begin{bmatrix} 1 \\ -3 \end{bmatrix} + y \begin{bmatrix} -3 \\ 9 \end{bmatrix} = \left( x - 3y \right) \begin{bmatrix} 1 \\ -3 \end{bmatrix}$

$\text{im}(A) = \text{span}\left( \begin{bmatrix} 1 \\ -3 \end{bmatrix} \right)$

Example

$A = \begin{bmatrix} 1 & -1 & 1 & 2 \\ -2 & 2 & 0 & 0 \\ -1 & 1 & 3 & 1 \end{bmatrix}$

$\text{rref}\left( A \right) = \begin{bmatrix} 1 & -1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}$

$\text{im}\left( A \right) = \text{span} \{ \begin{bmatrix} 1 \\ -2 \\ -1 \end{bmatrix}, \begin{bmatrix} -1 \\ 2 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ 0 \\ 3 \end{bmatrix}, \begin{bmatrix} 2 \\ 0 \\ 1 \end{bmatrix} \}$

$\text{im}\left( A \right) = \text{span} \{ \begin{bmatrix} 1 \\ -2 \\ -1 \end{bmatrix}, \begin{bmatrix} 1 \\ 0 \\ 3 \end{bmatrix}, \begin{bmatrix} 2 \\ 0 \\ 1 \end{bmatrix} \}$

Careful: Use the columns of $A$ itself that correspond to the leading 1’s in $\text{rref}\left( A \right)$, not the columns of $\text{rref}\left( A \right)$.
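The rule can be sketched in code: locate the leading-1 columns by row reduction, then pick those columns out of the original matrix. The names `pivot_columns` and `image_basis` are hypothetical helpers written for this note, and column indices are 0-based.

```python
from fractions import Fraction

def pivot_columns(matrix):
    """Return the 0-based indices of the columns that get a leading 1."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    pivots, r = [], 0
    for c in range(len(rows[0])):
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue  # no leading 1 in this column
        rows[r], rows[p] = rows[p], rows[r]
        for i in range(len(rows)):
            if i != r:
                factor = rows[i][c] / rows[r][c]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
        if r == len(rows):
            break
    return pivots

def image_basis(matrix):
    """Columns of the ORIGINAL matrix at the pivot positions."""
    return [[row[c] for row in matrix] for c in pivot_columns(matrix)]

A = [[1, -1, 1, 2],
     [-2, 2, 0, 0],
     [-1, 1, 3, 1]]
print(pivot_columns(A))  # columns 1, 3 and 4 in the notes' 1-based numbering
print(image_basis(A))
```

Note that `image_basis` indexes into `matrix`, not into the reduced rows, which is exactly the point of the warning.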

Example

$A = \begin{bmatrix} 1 & 2 & 3 \\ 1 & 2 & 3 \\ 1 & 2 & 3 \\ 1 & 2 & 3 \end{bmatrix}$

$\text{rref}\left( A \right) = \begin{bmatrix} 1 & 2 & 3 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix}$

$\text{im}\left( A \right) = \text{span}\{ \begin{bmatrix} 1 \\ 1 \\ 1\\ 1 \end{bmatrix} \} \neq \text{span} \{ \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} \} = \text{im} \left( \text{rref} \left( A \right) \right)$

Note: $\text{im}\left( T \right)$ or $\text{im}\left( A \right)$ is a subspace of $\mathbb{R}^{n}$.

- $\vec{0} \in \text{im}\left( A \right)$, since $A\vec{0} = \vec{0}$

- Closed under addition and scalar multiplication

Exercise

$I_3 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$. What is $\text{ker}\left( I_3 \right)$ and $\text{im}\left( I_3 \right)$?

$\text{ker}\left( I_3 \right) = \{ \vec{0} \}$

$\text{im}\left( I_3 \right) = \mathbb{R}^{3}$

Generally, if $A$ is an $n\times n$ matrix,

$\text{im}\left( A \right) = \mathbb{R}^{n}$ if and only if $\text{ker}\left( A \right) = \{ \vec{0} \}$ if and only if $A$ is invertible.

A linear transformation $T : \mathbb{R}^{n} \to \mathbb{R}^{n}$ is invertible if and only if:

- The equation $T \left( \vec{x} \right) = \vec{b}$ has a unique solution for any $\vec{b} \in \mathbb{R}^{n}$.

- The corresponding matrix $A$ is invertible and $\left( T_A \right) ^{-1} = T_{A^{-1}}$

- There is a matrix $B$ such that $AB = I_n$. Here $B = A^{-1}$

- There is a matrix $C$ such that $CA = I_n$. Here $C = A^{-1}$.

- The equation $A\vec{x} = \vec{b}$ has a unique solution for any $\vec{b}\in \mathbb{R}^{n}$. The unique solution is given by $\vec{x} = A^{-1} \vec{b}$.

- The equation $A\vec{x} = \vec{0}$ has only the zero solution.

- $\text{rref}\left( A \right) = I_n$

- $\text{rank}\left( A \right) = n$

- The image of the transformation $T$ is $\mathbb{R}^{n}$.

- The transformation $T$ is one-to-one
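A few of these equivalent conditions can be checked directly on a small case. The $2 \times 2$ matrix `A`, its candidate inverse `B`, and the right-hand side `b` below are hypothetical values chosen for illustration; they do not come from the notes.

```python
def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_vec(A, v):
    """Multiply matrix A by the vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

A = [[2, 1],
     [1, 1]]          # hypothetical invertible matrix
B = [[1, -1],
     [-1, 2]]         # candidate for the inverse of A
I2 = [[1, 0],
      [0, 1]]

print(mat_mul(A, B) == I2)   # AB = I_n
print(mat_mul(B, A) == I2)   # CA = I_n with C = B

b = [3, 2]                   # arbitrary right-hand side
x = mat_vec(B, b)            # x = A^{-1} b
print(x, mat_vec(A, x) == b) # x solves A x = b
```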

Basis: Spanning set with as few vectors as possible

Example

For $A = \begin{bmatrix} 1 & 2 & 0 & 1 & 2 \\ 2 & 4 & 3 & 5 & 1 \\ 1 & 2 & 2 & 3 & 0 \end{bmatrix}$, we are given $\text{rref}\left( A \right) = \begin{bmatrix} 1 & 2 & 0 & 1 & 2\\ 0 & x & y & 1 & -1 \\ 0 & 0 & 0 & 0 & 0 \end{bmatrix}$.

- Find $x$ and $y$.

- Find a basis for $\text{im}\left( A \right)$.

- Find a basis for $\text{ker}\left( A \right)$.

Solution

- $x=0$, $y=1$

- $\text{im}\left( A \right) = \text{span} \{ \begin{bmatrix} 1 \\ 2 \\ 1 \end{bmatrix}, \begin{bmatrix} 0 \\ 3 \\ 2 \end{bmatrix} \}$

- See below

$x_2 = t$

$x_4 = r$

$x_5 = s$

$x_1 = -2t - r - 2s$

$x_3 = -r + s$

\[\begin{bmatrix} -2t - r -2s \\ t \\ -r+s \\ r \\ s \end{bmatrix} = t\begin{bmatrix}-2 \\ 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} + r \begin{bmatrix} -1 \\ 0 \\ -1 \\ 1 \\ 0 \end{bmatrix} + s \begin{bmatrix} -2 \\ 0 \\ 1 \\ 0 \\ 1 \end{bmatrix}\]

$\text{ker}\left( A \right) = \text{span}\{ \begin{bmatrix} -2 \\ 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} , \begin{bmatrix} -1 \\ 0 \\ -1 \\ 1 \\ 0 \end{bmatrix}, \begin{bmatrix} -2 \\ 0 \\ 1 \\ 0 \\ 1 \end{bmatrix} \}$
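Both answers can be double-checked with a short script. It confirms that each kernel vector is sent to $\vec{0}$, and that the columns of $A$ without a leading 1 (columns 2, 4, 5) are combinations of columns 1 and 3 with the coefficients read from $\text{rref}\left( A \right)$. The helpers `mat_vec` and `column` are assumptions made for this sketch.

```python
def mat_vec(A, v):
    """Multiply matrix A (a list of rows) by the vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def column(A, j):
    """Return column j (0-based) of A as a list."""
    return [row[j] for row in A]

A = [[1, 2, 0, 1, 2],
     [2, 4, 3, 5, 1],
     [1, 2, 2, 3, 0]]

kernel_vectors = [[-2, 1, 0, 0, 0],
                  [-1, 0, -1, 1, 0],
                  [-2, 0, 1, 0, 1]]
for v in kernel_vectors:
    print(mat_vec(A, v))   # each product should be the zero vector

c1, c3 = column(A, 0), column(A, 2)   # the two basis columns of im(A)
print(column(A, 1) == [2 * a for a in c1])                  # col 2 = 2 col 1
print(column(A, 3) == [a + b for a, b in zip(c1, c3)])      # col 4 = col 1 + col 3
print(column(A, 4) == [2 * a - b for a, b in zip(c1, c3)])  # col 5 = 2 col 1 - col 3
```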

3.2 Subspaces of $\mathbb{R}^n$: Bases and Linear Independence

Definition:

For $W \subseteq \mathbb{R}^{n}$, $W$ is a subspace of $\mathbb{R}^{n}$ provided

- $\vec{0} \in W$

- If $\vec{v}_1,\ \vec{v}_2 \in W$ then $\vec{v}_1 + \vec{v}_2 \in W$

- If $\vec{v} \in W$, then $k\vec{v} \in W$ for all scalars $k$.

Which are subspaces of $\mathbb{R}^{3}$?

1) Vectors $\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ with $x=y$.

- $\vec{0}$ is in set

- $\begin{bmatrix} t \\ t \\ a \end{bmatrix} + \begin{bmatrix} s \\ s \\ b \end{bmatrix} = \begin{bmatrix} t + s \\ t + s \\ a+b \end{bmatrix}$

- $k \begin{bmatrix} t \\ t \\ a \end{bmatrix} = \begin{bmatrix} kt \\ kt \\ ka \end{bmatrix}$

Yes!

2) Vectors $\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ with $x=1$.

- $\begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$ not in set

No!

3) Vectors $\begin{bmatrix} x \\ y \\ z \end{bmatrix}$ with $xyz = 0$.

- $\begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix} + \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix} = \begin{bmatrix} 2 \\ 1 \\ 1 \end{bmatrix}$ (not in set)

No; fails property 2.
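The two failed cases can be replayed in code. This only checks the specific counterexamples from the notes; the function names `in_set_2` and `in_set_3` are invented here to stand for the membership conditions $x = 1$ and $xyz = 0$.

```python
def in_set_2(v):
    """Membership test for set 2: vectors with x = 1."""
    return v[0] == 1

def in_set_3(v):
    """Membership test for set 3: vectors with xyz = 0."""
    x, y, z = v
    return x * y * z == 0

# Set 2 fails property 1: the zero vector is not in it.
print(in_set_2([0, 0, 0]))

# Set 3 fails property 2: both vectors are in the set, their sum is not.
u = [1, 0, 1]
w = [1, 1, 0]
s = [a + b for a, b in zip(u, w)]
print(in_set_3(u), in_set_3(w), in_set_3(s))
```

A passing check on a few vectors never proves that a set is a subspace, but a single failing check like these is enough to rule it out.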

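Every subspace of $\mathbb{R}^{n}$ can be written as $\text{span}\left( \vec{v}_1,\ \vec{v}_2,\ \cdots ,\ \vec{v}_m \right)$ for some vectors $\vec{v}_1,\ \cdots ,\ \vec{v}_m$, and every such span is a subspace of $\mathbb{R}^{n}$.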

Example

$A = \begin{bmatrix} 1 & 3 & 0 & 5 \\ 2 & 6 & 1 & 16 \\ 5 & 15 & 0 & 25 \end{bmatrix}$

$\text{rref}\left( A \right) = \begin{bmatrix} 1 & 3 & 0 & 5 \\ 0 & 0 & 1 & 6 \\ 0 & 0 & 0 & 0 \end{bmatrix}$

$\text{im}\left( A \right) = \text{span}\{ \begin{bmatrix} 1 \\ 2 \\ 5 \end{bmatrix}, \begin{bmatrix} 3 \\ 6 \\ 15 \end{bmatrix}, \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \begin{bmatrix} 5 \\ 16 \\ 25 \end{bmatrix} \} $

As few vectors as possible: $\text{im}\left( A \right) = \text{span}\{\begin{bmatrix}1 \\ 2 \\ 5 \end{bmatrix}, \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} \}$

Definition:

Consider vectors $\vec{v}_1$, $\vec{v}_2$, $\cdots$, $\vec{v}_m$ in $\mathbb{R}^{n}$.

- Vector $\vec{v} _{i}$ is redundant provided it is a linear combination of $\vec{v} _1$, $\vec{v} _2$, …, $\vec{v} _{i-1}$. ($\vec{0}$ is always redundant)

- Vectors $\vec{v}_{1}$, $\vec{v}_2$, …, $\vec{v}_m$ are linearly independent provided none of them is redundant.

- Vectors $\vec{v}_1$, $\vec{v}_2$, …, $\vec{v}_m$ are linearly dependent provided at least one vector $\vec{v}_i$ is redundant.

Example

$\{ \begin{bmatrix} 1 \\ 2 \\ 5 \end{bmatrix}, \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} , \begin{bmatrix} 3 \\ 6 \\ 15 \end{bmatrix} , \begin{bmatrix} 5 \\ 16 \\ 25 \end{bmatrix} \}$ is a linearly dependent collection because $\vec{v}_3 = 3 \vec{v}_1$ and $\vec{v}_4 = 5\vec{v}_1 + 6 \vec{v}_2$.

Linear relations:

$-3 \vec{v}_1 + \vec{v}_3 = \vec{0}$

$-5 \vec{v}_1 - 6 \vec{v}_2 + \vec{v}_4 = \vec{0}$

Generally, we consider a linear relation $c_1\vec{v}_1 + c_2\vec{v}_2 + \cdots + c_m\vec{v}_m = \vec{0}$.

- Trivial relation (always exists): $c_1 = c_2 = c_3 = \cdots = c_m = 0$

- Nontrivial relation: at least one $c_i$ is nonzero.

Note: $\vec{v}_1$, $\vec{v}_2$, …, $\vec{v}_m$ are linearly dependent if and only if there exists a nontrivial relation among $\vec{v}_1$, $\vec{v}_2$, …, $\vec{v}_m$.

This is a trivial relation:

\[0 \begin{bmatrix} 5 \\ 16 \\ 25 \end{bmatrix} + 0 \begin{bmatrix} 1 \\ 2 \\ 5 \end{bmatrix} + 0 \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}\]

This is a nontrivial relation:

\[1 \begin{bmatrix} 5 \\ 16 \\ 25 \end{bmatrix} - 5 \begin{bmatrix} 1 \\ 2 \\ 5 \end{bmatrix} - 6 \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}\]
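The relations in this example are easy to verify by machine. The sketch below, with a made-up helper `linear_combination`, evaluates $c_1\vec{v}_1 + \cdots + c_m\vec{v}_m$ for the two nontrivial relations listed above and checks that each gives $\vec{0}$.

```python
def linear_combination(coeffs, vectors):
    """Return c_1 v_1 + ... + c_m v_m as a list of components."""
    n = len(vectors[0])
    return [sum(c * v[i] for c, v in zip(coeffs, vectors)) for i in range(n)]

v1 = [1, 2, 5]
v2 = [0, 1, 0]
v3 = [3, 6, 15]
v4 = [5, 16, 25]
vectors = [v1, v2, v3, v4]

# -3 v1 + v3 = 0
print(linear_combination([-3, 0, 1, 0], vectors))
# -5 v1 - 6 v2 + v4 = 0
print(linear_combination([-5, -6, 0, 1], vectors))
```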

Example

The vectors $\{ \begin{bmatrix} 1 \\ 6 \end{bmatrix} , \begin{bmatrix} 0 \\ 0 \end{bmatrix} \}$ are linearly dependent. ($\vec{0}$ is never part of a linearly independent set)

$\vec{0}$ is redundant:

\[0 \begin{bmatrix} 1 \\ 6 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}\]

Nontrivial relation:

\[0 \begin{bmatrix} 1 \\ 6 \end{bmatrix} + 10 \begin{bmatrix} 0 \\ 0 \end{bmatrix} = \vec{0}\]

Example

The vectors $\{\begin{bmatrix} 1 \\ 6 \end{bmatrix} , \begin{bmatrix} 1 \\ 0 \end{bmatrix} \}$ are linearly independent.

There are no redundant vectors: if $c_1 \begin{bmatrix} 1 \\ 6 \end{bmatrix} + c_2 \begin{bmatrix} 1 \\ 0 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix}$, then the second row gives $6c_1 + 0 = 0 \implies c_1 = 0$, and the first row gives $c_1 + c_2 = 0 + c_2 = 0 \implies c_2 = 0$. Only the trivial relation exists.

Recall from 3.1: We found a basis for $\text{im}\left( A \right)$ by listing all columns of $A$ and omitting redundant vectors.

Let’s interpret a linear relation $c_1 \vec{v}_1 + c_2 \vec{v}_2 + \cdots + c_m \vec{v}_m = \vec{0}$ as a matrix equation.

Let $A = \begin{bmatrix} | & | & & | \\ \vec{v}_1 & \vec{v}_2 & \cdots & \vec{v}_m \\ | & | & & | \end{bmatrix}$

Linear relation: $A \begin{bmatrix} c_1 \\ c_2 \\ \vdots \\ c_m \end{bmatrix} = \vec{0}$

Question: What does it mean for $\vec{v}_1$, …, $\vec{v}_m$ to be linearly independent, i.e. for the only relation to be $\begin{bmatrix} c_1 \\ c_2 \\ \vdots \\ c_m \end{bmatrix} = \vec{0}$?

Answer:

- Only solution to $A\vec{x}= \vec{0}$ is $\vec{x}= \vec{0}$

- $\text{ker}\left( A \right) = \{ \vec{0} \}$ (no free variables)

- $\text{rank}\left( A \right) = m$
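The rank criterion gives a mechanical test for independence: place the vectors as the columns of $A$ and compare $\text{rank}\left( A \right)$ with the number of vectors $m$. The sketch below is one possible way to do this with exact fractions; `rank` and `is_linearly_independent` are names chosen for this note, not a library API.

```python
from fractions import Fraction

def rank(matrix):
    """Number of leading entries found by forward elimination."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    r = 0
    for c in range(len(rows[0])):
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue  # no leading entry in this column
        rows[r], rows[p] = rows[p], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

def is_linearly_independent(vectors):
    """True when the matrix with these vectors as columns has rank m."""
    m = len(vectors)
    A = [[v[i] for v in vectors] for i in range(len(vectors[0]))]
    return rank(A) == m

print(is_linearly_independent([[1, 6], [1, 0]]))   # example from the notes
print(is_linearly_independent([[1, 6], [0, 0]]))   # contains the zero vector
print(is_linearly_independent([[1, 2, 5], [0, 1, 0], [3, 6, 15], [5, 16, 25]]))
```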

Linearly Dependent Collections of Vectors

$\{ \begin{bmatrix} 7 \\ 1 \end{bmatrix}, \begin{bmatrix} 14 \\ 2 \end{bmatrix} \}$ (2nd one is redundant: it is $2$ times the 1st)

$\{ \begin{bmatrix} 1 \\ 2 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} , \begin{bmatrix} 3 \\ 3 \\ 3 \end{bmatrix} , \begin{bmatrix} -1 \\ 11 \\ 7 \end{bmatrix} \}$ (4 vectors in $\mathbb{R}^{3}$ are dependent)

$\{ \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix} \}$ ($\vec{0}$ is in set)